Many processes cause more problems than they solve. Tom Sedge shows how to tailor your own.

For a long time I’ve been searching for better ways to go about the business of software development, particularly when what I thought were successful developments, produced on time and budget, still encountered many problems in production. Sometimes it has seemed as if it is only possible to know what should have been built after several years and versions getting it wrong. Before I came across Systems Thinking it hadn’t occurred to me that perhaps I was looking in the wrong place, forever focussing on the design and coding process, requirements or methodology and not the bigger picture of the problem I was trying to solve.

This article isn’t just aimed at managers and decision-makers in software companies. It’s aimed at senior developers, architects and team leads too. Towards the end, I’ll explain how you can make use of Systems Thinking even if you work in an environment that may be largely beyond your control, and give you some pointers on how you might be able to persuade the decision-makers to give it a chance.

Please note that throughout this article I am presenting my own personal understanding and explanations of the subject. Please do explore the links and references in the endnotes for other perspectives.

The state of software development

There’s much that is good about software development today, and yet despite the issue being highlighted time and time again over many years, the industry does continue to suffer from failure, cost and time overruns and poor quality. From high level government projects, like the NHS programme for IT and systems at HMRC, down to medium and even small scale private sector work, there are problems. The chances of a project being completed on time, on budget, fit for purpose and with high quality are far smaller than they should be when we consider the sheer quantity of new languages, tools, and working practices.

Over the years attention has turned to a number of candidates for blame:

- Requirements and designs are too complex or too simple. They are over-specified or under-specified, or may be unclear and inaccurate, and they come in late, and shift and change too much and too often.

- Code is poorly written and doesn’t follow standards. It isn’t modular enough or may be too modular. Perhaps there’s not enough object-orientation, perhaps too much, and the code can be buggy, hard to read, under- or over-commented and always insufficiently tested.

- Documentation may be absent, out of date and often incomplete. Sometimes it is excessive, excessively unclear or otherwise insufficient and inaccurate.

- A software development process can slow progress, generate too many useless documents and too few useful ones. The process in use is often good at handling either stable or changing conditions, but not both. It may involve too little or too much planning and impose excessive or insufficient controls.

To address these there have been many good and useful things that promise to help, some technical and some managerial:

- New languages that promise to eliminate bugs and raise quality.

- A zillion third-party libraries that promise to take the effort out of coding, and allow a week’s work to be done in a day.

- New all-singing and all-dancing development tools which can analyse code, eliminate bugs and support all types of refactoring.

- New capabilities in collaborative source code control systems supporting multiple and overlapping lines of development.

- We’ve also seen practices that promise to raise quality and productivity. Unit testing, pair programming, collective code ownership, code reviewing, design modelling, use cases, user stories and more.

- There have been new processes that promise to raise quality, dramatically speed development and increase agility. A tidal wave of agile methodologies from RUP (if you class it as agile; many don’t), through Lean, XP, Scrum, and more recently Kanban and half-a-dozen others.

Given the demonstrable value of many of these individual improvements, why aren’t there more successes? There are several reasons why I think we haven’t yet reached the promised land where the vast majority of software developments are an unqualified success.

Understanding and improving work

The purpose of any software development is to provide software to a group of end users to make their lives easier. In some cases those developing the software have no real understanding of the work that those end users do. A clear understanding of their work, how it flows and the needs that drive that work is essential for good requirements and subsequent good design. All too often there’s a vague set of requirements to computerise an existing manual workflow which risks exactly that: computerisation with no other improvement to how the work is done. In other cases the task may be to replace an inadequate computer system with a slightly better one, but one which still implements the same flawed approach.

I remember from my days using Lotus Domino that a designer somewhere had clearly thought that a good way to computerise a filing cabinet was to replicate the same inflexible concepts of cabinets, binders, folders and files in computer form. Perhaps they thought it would at least be easy to understand? What they achieved was all the disadvantages of the paper system plus some minimal benefits. Worse than that, the restrictive paper concepts made little sense in computer land, so they also created confusion. No-one seems to have asked the question: what are users actually trying to do?

It doesn’t matter how well written the requirements, how many focus groups or workshops, how many or few features are included or how polished the UI. None of these will make a difference if there’s a failure to understand the system of which the user is part. Consulting customer proxies and power users can hinder as well as help because they are often not representative of the main user base and as experts may have quite a different approach to the work than their peers.

The best way to get this understanding is to go there and see for oneself. It is too important to trust to a third-party who might jump straight into solution mode and deliver a specification for the wrong thing. Key staff need to go and meet users in their workplace and understand them and what they do. It is important to include developers in this, not just requirements and user-interface experts, because developers need the same understanding if they are going to model concepts accurately and produce a clean and appropriate design for a software solution.

Once there’s understanding of how the work is performed currently, careful thought needs to be given to whether the introduction of new software could and should change that work. Considering changing how users work should be an integral part of any software development process. There may well be some customers that don’t want that, but the greatest benefits of any technology lie in complementing the technology with an optimised workflow, so it is worth trying to persuade them. Without an effective and appropriate workflow even the best and most technically beautiful software may be dead on arrival.

I am suggesting that one of the principal barriers to greater success is a failure to properly understand the nature of the work that end users do, the system of which that work is part, and how that system can be changed and optimised through the introduction of new software. Think about all those useful little applications that were written by people wanting to solve their own problems. When they fully understand the nature of their problem, then they can solve it in elegant ways that dramatically improve productivity.

Misunderstandings start before we get to any detailed requirements, in the basic knowledge of the system we’re trying to improve. The first customer questions shouldn’t be ‘What do you want and when?’; they should be ‘How do you work now and what are you trying to achieve?’. We need a mechanism for understanding and mapping how people work and uncovering their needs, and a design language for workflows that allows us to design changes and predict their impact.

Trialling and measuring changes

When a change to a system is being made, there are always risks. Will the change be a change for the better? Did we really do a good job with that software and what’s the evidence for that apart from a thank you from the customer?

During the process of designing software and optimising workflow, there’ll be many ideas and possibilities. The best way to choose between alternatives is to try them out with real users in their normal work, and not just to rely on their opinions but to quantitatively measure the impact. That way good can be distinguished from bad, the whole solution can be improved, and the effect on the customer of the final solution can be estimated before most of the work is done, giving real support to the business case.

The popularity of prototyping varies, but it is rare to trial prototypes with real users and even rarer to test them with real work in a live situation, even though there are a number of ways of doing this safely, for example using the prototype in parallel with the existing solution. There’s rarely any attempt to measure the impact in quantitative terms.

The second suggestion I make is that the lack of proper trials and measurements of solution effectiveness means that even with a good understanding of how users work, poor solutions can still be delivered. We need a mechanism for defining sensible measures and trying out changes in order to make the right decisions about how software should work.

The process of developing software

When designing and building software, there needs to be a way of working that maximises the value delivered to the customer while minimising costs. It turns out that to do this well there needs to be the same kind of understanding about internal processes as for customers and how they work. This goes far beyond just considering developers and testers: it needs to include everyone who is involved in the production and maintenance of software, including sales, marketing, product management, support and quality management.

How does the end-to-end software development process work in your company? How can it be improved and how can these improvements be trialled and measured? Without some way to measure improvements, ideas are sometimes tried blind in the hope that some will be winners. There may be a thousand suggestions and recommendations out there, but which ones apply in any specific situation?

A classic mistake is to presume that a software development process should be fixed and that it comes in neat off-the-shelf bundles labelled as ‘methodology X’. Without exception, every workplace has some unique needs, and though a methodology might indeed provide useful tools and ideas, maximum effectiveness demands customising the way work is done to meet the environment. This is only common sense. A software development process can slow down work in several ways. It can be so prescriptive and burdened by ceremony that it stifles through workload, or it can be so free and lightweight that it impedes progress through lack of direction, focus and ensuing re-work and waste. Agile is no silver bullet here, and while it may address some concerns of software developers it may not help with those of other departments [ Kelly11 ]. Indeed many of the agile methodologies have little to say about people who are not developers or testers.

My third suggestion is that a lack of understanding about the effectiveness of our own software development processes, including how to measure them and safely improve them, is a major factor that gets in the way of delivering good software to customers.

Enter systems thinking

So what is needed is a solution that provides a template for working with and understanding customers and their work, analysing and improving workflows, trialling and measuring the impact of improvements, and which can also be applied to the internal processes used to create software. A solution which is lightweight, based on facts, fast, implements continuous improvement, and is low-cost and low-risk. As you might have already guessed, the process I’m going to suggest that can help with all of this is Systems Thinking.

Systems Thinking is a term that was coined in the 1950s and later grew out of the work of W. Edwards Deming, whose book Out of the Crisis [Deming82] condemned the state of modern management. His ideas were taken up by Taiichi Ohno in Japan, who went on to design the Toyota Production System, a systems thinking approach to the manufacture of cars which simultaneously reduced costs and raised quality. It was the Toyota Production System that directly led to Toyota’s growth and dominance in car manufacturing. More recently John Seddon [ Seddon ], author of Freedom from Command and Control [ Seddon03 ], has pioneered applications of the same approach to service industries and further developed it.

Systems Thinking is an approach to work that considers the whole system of which the work is a part and provides tools to map out and improve that system. This is in contrast to traditional approaches that tend to focus on micro-optimisations in particular areas. It can be applied to any organisation producing products or services, and includes tools to study and learn from demand, design measures of performance, and map out processes. It then guides the design of changes to those processes and safely trials changes using measures to prove their effectiveness before they are rolled out.

It works through a continual cycle of improvement that can be driven at whatever speed an organisation needs, delivering low-risk incremental changes that add up to radical long-term streamlining and optimisation. It does this with staff experiencing both minimal disruption and maximal involvement in designing improvements, which has the side effect of significantly raising morale and motivation because those who do the work get to shape how the work changes.

The problem with micro-optimisations is that improvements in one area may cause bigger problems elsewhere. The improvements they appear to deliver (for example cost saving) may actually harm customers of the product or service somewhere else, leading to poorer quality, worse service and ultimately damage to reputation and lower sales. This wrong-headed strategy is something we each encounter every day. For example, I regularly have the ‘joy’ of interacting with BT’s computerised helpline, which not only forces me to laboriously beep my way through a deep hierarchical menu, but now wants me to speak my request so I can enjoy a seemingly endless tennis match of: ‘Did you say X?’, ‘No I said Y!’. Companies like BT choose to annoy their customers in the name of small cost savings, causing themselves greater costs elsewhere handling complaints, lost business and effects on reputation. If they saw the true costs, I’m sure they wouldn’t do it.

I don’t present Systems Thinking as a silver bullet. Instead, think of it as a way to craft your own personalised silver bullet of a process that ideally suits how you and your team need to work. You’ll create a flexible silver bullet that you’ll continue to polish as your circumstances change. That’s the only guarantee of success: having the knowledge, confidence and tools to tailor your approach to changing conditions.

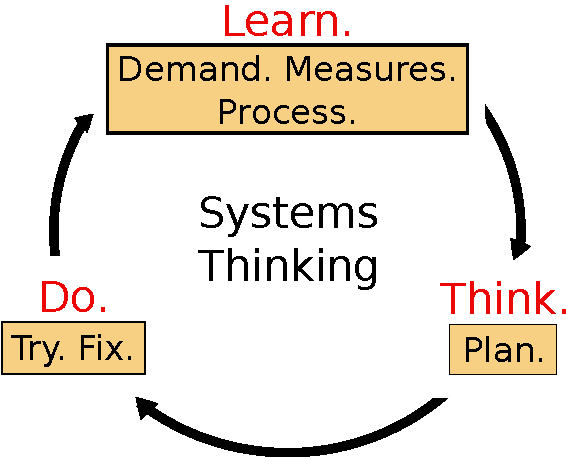

Let’s take a look at the main components of Systems Thinking, which I present through the lens of the Learn. Think. Do. improvement cycle, which I also use as a model for my coaching work. (You’ll find that Seddon calls this process Check, Plan, Do. I prefer my own terminology, but in essence it is the same idea.)

Figure 1

You’ll notice that Systems Thinking provides a continuous cycle of improvement. There’s a learning phase, where we study and understand customers’ needs, their systems and environments (demand), define new ways to measure performance, and map out processes. This is followed by a thinking phase, where we design improvements to these processes, and a doing phase where these improvements are trialled and, if successful, made permanent. Let’s look at it in more depth, and specifically how each stage can be applied to Software Development.

Learn

The first and biggest stage is Learn. This is split into three parts: learning about demand, designing improved measures, and mapping out processes.

Throughout all of these stages, the way I recommend you work is to establish a multi-disciplinary improvement team, with representatives from every department involved in delivering your product or service, and that you include experienced front-line staff with expert knowledge of what actually happens on the ground.

Demand

In Systems Thinking, demand is the name you give to all contact from customers, whether it is for delivered products and services, support calls, complaints, enquiries, future developments, sales, or any other purpose.

There are two types of demand:

- Value demand – anything your team does that gives the customer value. This is what you’re in business for.

- Failure demand – anything you do that doesn’t give the customer value. This includes any waste or failings on your team’s part that get in the way of providing value demand.

Companies that have a high level of failure demand have high costs servicing that demand. It is quite common for failure demand to be responsible for a significant percentage of all costs. If you want to dramatically reduce costs, do it not by focussing on costs like BT has done, but by focussing on reducing failure demand.

In Software Development, examples of value demand include:

- The main software products and services you create that solve a customer’s problem and make users’ lives easier.

- Any supporting products and services that you offer which contribute to solving their problems, excluding any problems you created – for example, if customers have difficulty installing or configuring your products and this leads you to sell consultancy services on product installation, that is an example of failure, not value.

Examples of failure demand include:

- Most customer support. This may be due to inadequate product quality, lack of fitness-for-purpose, too much complexity, lack of ease-of-use, poor documentation, or other failings.

- Most customer training. This may be due to product complexity, lack of ease-of-use or fitness-for-purpose.

- Most customisation and installation services, again due to complexity, lack of documentation or ease-of-use.

You might be surprised to see some of these on the list as ‘failure demand’. It is very easy to become so accustomed to handling failure demand that these activities become taken for granted. I’m not suggesting that you simply dispense with support and training or that they are unnecessary. What I’m saying is that perhaps you wouldn’t need to provide nearly so much of both if you considered the causes of this demand and improved your software and working practices to reduce them.

It can be tempting to look at these services as good sources of additional revenue, but in a competitive landscape burdening your customers with these extra costs will only make the competition more attractive. Look at the success of Apple and its App Store: redesigning applications to make them simple, intuitive, instantly deployable and updatable, and with minimal need for documentation or support has enabled thousands of independent developers to go solo. They are no longer burdened with the failure demand costs that in the past would have made this route impractical.

From your point of view, there are two places where value and failure demand operate:

- External demand: This is demand arising from customers external to your organisation, of which I gave some examples above.

- Internal demand: This is demand arising inside your own organisation in the service of external demand. It is the things you do, including your own internal processes, to provide your products and services.

It is desirable to maximise internal value demand and minimise internal failure demand, because that means maximum focus on serving the customer at minimum cost. Examples of internal demand will vary from business to business, but there are common indicators that I would suggest are associated with a good balance:

- Communication is clear, concise, and to the point. There’s a culture of open and direct communication person-to-person rather than up and down the hierarchy.

- Meetings focus on making decisions rather than exploring options and they are short and infrequent.

- There are frequent whiteboard sessions with a range of staff from different departments, so knowledge about the whole business is spread widely and in considerable depth. Priorities are clear, long-term and rarely change.

- Everyone can contribute ideas and suggestions for improvement, no matter how junior, and there's a culture of question-asking and fact-finding before decision-making.

- Role-rotation, job-shadowing and other techniques are used to share skills and experience and reduce over-reliance on a few key individuals.

- There’s rapid learning from failure through a tight cycle of feedback and continuous improvement, and change is driven through the focus of providing customer value.

- There’s an appropriate level of formal process and internal documentation. Appropriate means it is all used, all useful, and all serves a direct customer purpose.

- Members of the core development team are in close contact with customers and regularly visit their workplace.

- Internal IT is streamlined, simple and appropriate to needs.

This is my own list and is far from exhaustive or definitive, but it should give you some idea of how close your organisation is to serving demand well. If you have many of these, be happy; you’re probably doing quite well. If you have few or none, also be happy; there’s big scope for improvement. If you have few of these and don’t think others will let you make the changes needed to get more, then either find a way to convince them or move on. I’m a strong believer that life’s too short to let other people waste it.

Studying demand involves a lot more than just listing types. You’ll need to examine each type in detail to understand how much you have of it, where it comes from, what the underlying causes are, and how the way you work is contributing to it. Once demand is well understood, the goal is simply to maximise value demand and minimise failure demand. Some demand is predictable and some is not. Systems Thinking helps with both because it enables us to optimise processes to handle predictable demand, whilst increasing flexibility to handle the unpredictable.
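As a rough illustration of what "how much you have of it" and "where it comes from" might look like in practice, here is a minimal Python sketch. It assumes you have already labelled each customer contact as value or failure demand and noted a cause; the `Contact` type, its field names and the sample data are all hypothetical and not taken from any particular tool.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Contact:
    """One customer contact. Field names are illustrative assumptions."""
    kind: str   # "value" or "failure", as judged by your improvement team
    cause: str  # short description of why the customer got in touch


def demand_summary(contacts):
    """Return (failure share of all contacts, failure causes by frequency)."""
    if not contacts:
        return 0.0, []
    failures = [c for c in contacts if c.kind == "failure"]
    share = len(failures) / len(contacts)
    causes = Counter(c.cause for c in failures).most_common()
    return share, causes


# Hypothetical sample of contacts logged over a short period.
contacts = [
    Contact("value", "feature request"),
    Contact("failure", "install problem"),
    Contact("failure", "install problem"),
    Contact("failure", "unclear documentation"),
    Contact("value", "new order"),
]
share, causes = demand_summary(contacts)
print(f"Failure demand: {share:.0%}")
for cause, count in causes:
    print(f"  {cause}: {count}")
```

The counting is the easy part. The judgement about which contacts are failure demand, and what really caused them, still has to come from people who know the work.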

Studying demand can be done from both the software provider’s perspective (i.e. studying what your customers want from you), and if you are a B2B company, from your customer’s perspective (i.e. studying what your customer’s customers want from them and how you can help them provide it). So learning about demand can be used by you not only to improve your own business, but also to help your customers understand their own demand and improve their business too, providing extra value to them that will help distance you from the competition.

Measures

The next step is to design some new measures (or if you prefer: metrics). We talk about measures deliberately, since they always follow events – you are measuring something that has already happened. In order to know the quantity of value demand and failure demand in your software company, you need a reliable way to measure it. Without that, how will you be sure that you’ve made any improvement?

The alternative, ‘targets’, are not a good idea since there’s no reliable way to decide what a target should be, and the presence of a target can severely limit both the ambition and scope for improvement. Ambition is limited because targets are usually set quite low, with a modest change in mind. Scope is limited because targets are often set for things that aren’t closely related to delivering value to customers, and then the goal becomes distorted into meeting the target rather than providing the best product or service.

Systems Thinking provides a way to design effective measures so that you can track current and future performance. In software some of this information can be drawn from existing failure and value demand systems – for example bug trackers, feature requests, customer support systems and sales databases. Once established, measures are tracked over time to see how they are affected by changes.

All of your measures need to be relevant and important to your customers. Generic examples might include the end-to-end time it takes you to deliver new features or bug fixes, how quickly you respond to enquiries, or the number of severe bugs customers detect for you. Specific examples will include measures relevant to a particular product and customer: the impact your software has on them.

None of those I’ve mentioned are ‘off-the-shelf’ measures that you should necessarily adopt. The whole point is to work out which measures would best suit you and your customers. The important point is that they must all be things that your customers care about. With a clean set of measures that directly represent how well you are providing value to your customers, you have a benchmark against which you can test any change you make to your workflow.
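To make one of the generic examples concrete, the sketch below computes end-to-end delivery time from pairs of dates. It is only an illustration under stated assumptions: the dates are invented, and in practice you would pull the "requested" and "delivered" dates from whatever bug tracker or feature-request system you already use.

```python
from datetime import date
from statistics import median


def lead_times_in_days(items):
    """items: iterable of (requested, delivered) date pairs; returns days for each."""
    return [(delivered - requested).days for requested, delivered in items]


# Hypothetical sample: (date the customer asked, date the change reached them).
delivered_items = [
    (date(2011, 3, 1), date(2011, 3, 15)),
    (date(2011, 3, 4), date(2011, 4, 1)),
    (date(2011, 3, 10), date(2011, 3, 17)),
]
days = lead_times_in_days(delivered_items)
print("Lead times (days):", days)
print("Median:", median(days), "Worst:", max(days))
```

Note that the clock starts when the customer asks and stops when they have the result in their hands, not when development starts or finishes. That choice is what keeps the measure tied to something the customer cares about.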

If your customers have their own customers, you can also help them to come up with measures that will demonstrate the value your software gives to their own customers. There’s nothing like concrete evidence of the difference you made to their bottom line to convince them to stay with you for the long term.

Process

The final step in Learn is to map out your internal workflow. I’ve been experimenting with a simple visual process language that draws out the important stages and hand-offs involved in a workflow. This picture allows everyone in your organisation to understand the whole system, explains much of the origin of your failure demand, and is also used later on in Think and Do to design and prototype improvements.

Mapping processes is best done in collaborative face-to-face workshops using whiteboards, flip-charts, index cards, post-it notes and other low-tech tools. The workshop should include a representative from every department involved in the design and delivery of software, bringing together expertise to collectively understand how work is done. There have been reports of many surprising things learned through this approach, particularly in organisations that have separate departments with poor communication. It is also all too common for duplicate and unnecessary steps to become apparent.

This same workshop approach can then be used when working with customers to map out and understand their processes, so that you get a full understanding of their workflow before you start designing software. This avoids prematurely discussing requirements before the customer environment and specific needs are understood.

Think

The second stage is to design workflow improvements using the process maps created in Learn.

This is where planning takes place, but it is not what you might be used to as planning. Instead of big Word documents, Gantt charts, MS Project plans or similar tools, the same form of collaborative workshop is used to design changes to the process. Changes are simulated by creating new steps, removing steps, altering the flow of work, handoffs and work products that are created. Each change is then considered end-to-end and the workshop team look for possible problems or unintended consequences.

This is a form of low-cost process prototyping that flushes out the best ideas, works out their details and then stress-tests them on paper before you go near trying them out. The result of Think is one or more concrete improvements that have been analysed from the perspective of the whole system and how they will deliver customer value. Within your own software development workflow, it is likely that different development methodologies will be discussed. That’s fine, so long as you treat each one as a template rather than a prescription and are prepared to properly simulate the full consequences using your process map before adopting anything.

I don’t want to either promote or bash any specific methodologies, since they all have strengths and weaknesses, and often contain very useful tools. Methodologies generally split into high-discipline and low-discipline groups. High-discipline methodologies come with an array of constraints that will impact your business elsewhere. Whether the impact is positive or negative you’ll have to decide. Examples include Waterfall, XP and Scrum.

Although I am far from a fan, Waterfall can work in some situations, with simple and clear requirements and short projects. XP contains a tightly-prescriptive set of practices which also dictate how you approach requirements, how you deal with your customers, and changes you’ll need to make in your working environment and support systems. If you’re willing to change your business around XP and XP fits (once you’ve studied and understood your process and the impact it will have), then go for it. Just make sure all the stakeholders are on board with that decision, not just the development team. Scrum is less prescriptive than XP, but will force you to organise your business around set-length sprints. Again, so long as you are happy with the consequences, and everyone in the business can buy into the sprint cycle, then go for it.

Examples of low-discipline methodologies include Lean and Kanban. These are mostly concerned with promoting continual flow and improvement and in general they are more flexible than the high-discipline alternatives. However, precisely because they are so simple, you’ll need to design a layer on top of your own processes to create a full recipe for how your team develops software. So low-discipline does require more work on your part, albeit for a more bespoke result.

I find methodology is much less important than people usually suppose, and the best methodology in the world will still lead you to failure if you are only thinking about the mechanics of writing software and not the wider context of its usage.

The goal of Think is not to re-write your process or procedure documents. That’s something that you should only do when you are ready to make a change permanent in Do. Think is all about designing the best process you can to deliver software.

You might require a special permit to do so, but you probably don’t want to be discussing issues of process compliance or standardisation at this stage, and it may be best to keep those guys out of the room. That’s because Think is about exploring options and compliance people tend to be better at critiquing detail. Obviously if you do have a good idea what they need, then you can consider it now. They do need to become involved at some point as usually there’s an important business reason behind the compliance function. But there’ll be time for that later when you have both specific changes and a strong line of reasoning about how those changes are the right thing to do.

With a customer’s workflow, you adopt the same approach. Having mapped out their workflow with them, work alongside them to see how it can be improved. Do this before you start thinking about the software. Just focus on what the users are trying to do and how that can best be done. When you are both comfortable with the new workflow, then start thinking about what the software will need to do. Resist jumping into features and benefits too early. The goal isn’t to find everything your software could possibly do. It is to streamline the customer’s workflow and only then create the minimal amount of software that is needed to make that flow run smoothly. You’ll end up writing less software that is a better fit. Your costs will go down and your customers will get a cheaper and better result more quickly.

Do

The final stage in the improvement cycle is Do. Here you get to try out changes and make them permanent.

The traditional idea of special ‘change’ projects is an unhelpful one, because it pretends that change should be something infrequent and separate from normal work, rather than constant and an integral part of a team’s DNA. Treating change as a project can actively inhibit progress. It needs to be small, incremental and continual. Why wait until the end of a project to learn?

Try

The Try step is all about testing the water with minimal costs. Rather than simply take your best ideas from Plan and hope they work, Try allows you to gather evidence and refine or reject them, reducing your risks and saving on the costs of change. In Try, you use your improvement team to identify the best way to try out a change, with minimal costs. This can involve a temporary paper or card-based solution to allow the new method to be tested and refined.

Software people usually hate this, because their whole job is to create computerised solutions, but the important point to remember is that you need the ability to adapt and refine your solution in real-time, and you are very unlikely to have the time and the money needed to develop software prototypes that will quickly be superseded. Try needs to be cheap, simple and fast.

Changes in your own workflow are trialled for however long it takes for the improvement to show up in your measures, perhaps as a reduction in internal bug counts or faster turnaround times for new features. If no improvement shows up, then you need to have the courage to reject the change and go back to the drawing board. Either it hasn’t worked or your measures were not good enough to detect the improvement. If you suspect the latter, go back and improve your measures before going any further.
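As a rough illustration only, the decision described above could be sketched in Python as follows. Everything here is an assumption made for the example rather than part of the method: the function name `trial_outcome`, the use of the median, the 10% threshold and the sample figures are all hypothetical, and a handful of data points proves very little on its own.

```python
from statistics import median


def trial_outcome(baseline, trial, min_improvement=0.10):
    """Compare a lower-is-better measure (e.g. turnaround days) before and during a trial.

    The median and the 10% threshold are illustrative assumptions, not rules.
    """
    if not baseline or not trial:
        raise ValueError("need at least one observation in each period")

    before = median(baseline)
    after = median(trial)

    # Relative improvement; a baseline of zero leaves no room to improve.
    change = (before - after) / before if before else 0.0

    if change >= min_improvement:
        verdict = "keep"
    else:
        # Either the change has not worked or the measure cannot detect it.
        verdict = "reject or revisit measures"
    return before, after, change, verdict


# Hypothetical turnaround times, in days, for new features.
before, after, change, verdict = trial_outcome([12, 9, 15, 11, 14], [8, 10, 7, 9, 11])
print(f"{before} -> {after} days ({change:.0%} better): {verdict}")
```

The point of writing it down, even this crudely, is that the threshold and the measure are agreed before the trial starts, so a disappointing result leads back to the drawing board rather than to a debate about what counts as success.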

If you want to create the best software for your customers, you need to trial it with them too. This means much more than just throwing a Beta over the wall. You need to sit with customer users, watch them use it and learn how to improve it as a result. Best of all is to wait for trialled improvements to show up in their measures so that you have concrete evidence you’re on the right track.

Note that sometimes, for whatever reason, it isn’t possible to do Try. Perhaps the cost would be too great or there isn’t enough time. That’s OK: you can move straight from Plan to Fix. Just be aware that you are putting a lot of pressure on your planning being correct, and it might not be. Seddon doesn’t talk about a separate Try step, but I think it makes sense as a way to limit risk, so long as the circumstances permit it.

Fix

This is all about making changes permanent, having become convinced of their effectiveness in Try (or Plan if you are skipping Try).

When it comes to your process and procedure documentation, this is the time to draw up new versions because you have refined your workflow changes and they are ready to go live. It is also the time to fully engage your compliance people (if you are lucky enough to have them!) and start the discussion on how to demonstrate compliance with ISO9001, CMM, TickIT or anything else that you subscribe to. Make sure that you only look to provide evidence to demonstrate compliance and do not end up changing your workflow purely in response to compliance demands. If you do decide to tweak your changes in any significant way, go straight back to Plan, properly consider the change, trial the new version again in Try and only then go forward.

If your compliance people insist on a specific change, remind them that their industry is always claiming that they neither prescribe nor proscribe how you work, and ask them to refer you directly to the rule in the standard that they are concerned about. Read the rule, work out how to provide evidence for it and then show them both the rule and your justification of how your change meets it.

When it comes to supporting IT systems that might need to be replaced or changed, software companies have a big advantage over others as they have the option to roll their own. I always recommend seriously considering this, because you can create a streamlined software solution that precisely meets what you need, thus minimising sources of internal failure demand. Though it might be tempting to use an off-the-shelf product, such products are often so feature-full and generic that they do far too much and are difficult to configure, and the high price that they justify through the feature set is out of proportion to the small number of things you actually want to use. Of course, there are times when it makes sense to buy off-the-shelf; source-code control systems are a good example (and the best of these are free in my experience), because it would be impractical to write your own.

Having completed Do, you then embark on a new cycle of Learn, to further refine your understanding of demand, measures and process, and to enable you to respond to changes in your marketplace. If you really want to deliver the best software you can to your customers, you’ll build a long-term relationship with them and keep going through cycles of improvement together.

The cycle repeats over and over again, leading you to dramatic long-term improvements with low risk, reduced costs and raised morale. That’s the promise of Systems Thinking.

Getting started

I promised in the introduction that I’d say more on how to get started with Systems Thinking, particularly if you work somewhere where how the work is done is largely not under your control.

You could apply it to a single product or project, properly including customer end users as a limited experiment in trialling new working practices. Or you could apply it within a single department or team, so instead of considering the whole of your organisation, your customers become the other departments or groups that need things from you. You would go through the same process of studying your demand, defining useful measures of your work’s quality and productivity in terms of other teams’ needs, mapping your processes and trialling and improving them. While this won’t put you closer in touch with end users, you could use it to build a documented track-record of internal success and then use that to raise awareness and gain buy-in to take it further.

You could apply it within a team, perhaps just with developers. Now perhaps your customers are the project manager and QA and the things you measure will be the things they care about. If you really have little autonomy, you could apply it to yourself personally and look at how you might both measure and improve how you do your work. If you build a body of evidence from this, that could be quite persuasive at your next appraisal both for your own advancement and for Systems Thinking.

Measures are a great way to get support for change. If you can devise better measures and go and gather the data, you can use that as evidence to persuade other people that your organisation needs to consider a better way to work. Then you could be like Jon Stegner [Heath10], who wanted to change his manufacturing organisation’s purchasing approach. Rather than write a presentation on how purchasing could be improved, he instead looked for evidence of the problem and simply presented his management team with a table containing a huge pile of the 424 different types of glove that various departments purchased, each tagged with its price, ranging from $5 to $17. Such an immediate and visual demonstration of inefficiency convinced them in a heartbeat that change was needed.

Conclusion

In this article, I’ve briefly outlined what I see as some problems in today’s software development and explained at a high-level my understanding of how Systems Thinking can help. I’ve not had time here to go into any great detail but I hope you’ve seen enough to at least start considering the possibility of this approach. I believe that Systems Thinking has the potential to change the landscape, and I urge you to find out more and explore. My knowledge of this field is far from complete and I’d welcome any opportunity to hear about your explorations and build a body of experience and evidence to share with others.

Wishing you well with your adventures in software,

Tom.

References

[Deming82] Deming, W. Edwards (1982) Out of the Crisis. Cambridge, Mass: MIT Press.

[Heath10] Heath, Chip & Dan (2010) Switch: How to change things when change is hard. New York: Random House.

[Kelly11] Allan Kelly wrote about a framework for agile requirements management in Overload 101, in his article ‘The Agile 10 Steps Model’.

[Seddon] John Seddon’s organisation Vanguard Consulting Ltd has an authoritative website on systems thinking containing many useful resources and references to further reading: www.systemsthinking.co.uk

[Seddon03] Seddon, John (2003) Freedom from Command and Control . Buckingham, UK: Moreton Press.