Should you target 32 or 64 bits? Sergey Ignatchenko and Dmytro Ivanchykhin consider the costs and benefits.

Disclaimer: as usual, the opinions within this article are those of ‘No Bugs’ Bunny, and do not necessarily coincide with the opinions of the translators and editors. Please also keep in mind that translation difficulties from Lapine (like those described in [ Loganberry04 ]) might have prevented an exact translation. In addition, we expressly disclaim all responsibility for any action or inaction resulting from reading this article. All mentions of ‘my’, ‘I’, etc. in the text below belong to ‘No Bugs’ Bunny and to nobody else.

64-bit systems are becoming more and more ubiquitous these days. Not only are servers and PCs 64-bit now, but the most recent Apple A7 CPU (as used in the iPhone 5s) is 64-bit too, with Qualcomm's Snapdragon 6xx series to follow suit [ Techcrunch14 ].

On the other hand, all the common 64-bit CPUs and OSs also support running 32-bit applications. This leads us to the question: for a 64-bit OS, should I write an application as a native 64-bit one, or is 32-bit good enough? Of course, there is a swarm of developers thinking along the lines of ‘bigger is always better’, but in practice this is not always the case. In fact, it has been shown many times that this sort of simplistic approach is often outright misleading. Well-known examples include 14" LCDs having a larger viewable screen area than 15" CRTs; RAID-2 to RAID-4 being no better than RAID-1 (and even RAID-5 vs RAID-1 is a choice that depends on project specifics); and a 41-megapixel cellphone camera being quite different from even a 10-megapixel DSLR, despite all the improvements in cellphone cameras [ DPReview14 ]. So, let us see what the price of going 64-bit is (ignoring the migration costs, which can easily be prohibitive but are outside the scope of this article).

Amount of memory supported (pro 64-bit)

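With memory, everything is simple: if your application needs more than roughly 2G–4G of RAM, re-compile it as 64-bit for a 64-bit OS (a 32-bit process can address at most 4G, and depending on the OS and its settings, only 2G–4G of that is available to the application). However, the number of applications that genuinely need this amount of RAM is not that high.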

Performance – 64-bit arithmetic (pro 64-bit)

The next thing to consider is whether your application makes intensive use of 64-bit (or larger) arithmetic. If it does, it is likely to get a performance boost from being 64-bit, at least on x64 (also known as x86-64 or AMD64). The reason is that an application compiled as 32-bit x86 is restricted to the 32-bit x86 instruction set, which has no 64-bit general-purpose integer operations, even if the processor it runs on is a 64-bit one; each 64-bit operation then has to be built out of several 32-bit ones.
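To make this concrete, here is a small illustrative sketch (the function name `mul64via32` is hypothetical) of what a single 64-bit multiplication turns into when only 32x32→64-bit multiplies are available, which is roughly the work a 32-bit x86 compiler has to emit. A native x64 build does the same job with one 64-bit multiply instruction.

```cpp
#include <cstdint>

// Illustration only: low 64 bits of a * b computed from 32-bit halves,
// using only products of two 32-bit values (each fits in 64 bits).
std::uint64_t mul64via32(std::uint64_t a, std::uint64_t b)
{
    const std::uint64_t aLo = a & 0xFFFFFFFFu, aHi = a >> 32;
    const std::uint64_t bLo = b & 0xFFFFFFFFu, bHi = b >> 32;

    // aHi * bHi only contributes to bits 64 and above, so it is skipped
    const std::uint64_t low   = aLo * bLo;              // full 64-bit partial product
    const std::uint64_t cross = aLo * bHi + aHi * bLo;  // only its low 32 bits survive
    return low + (cross << 32);                         // all arithmetic is mod 2^64
}
```

Three multiplications plus shifts and additions replace one instruction; code that does this in tight loops is where a 64-bit build pays off.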

For example, I’ve measured (on the same machine and within the same 64-bit OS) the performance of OpenSSL’s implementation of RSA, and observed that the 64-bit executable had an advantage of approx. 2x (for RSA-512) to approx. 4x (for RSA-4096) over the 32-bit executable. It is worth noting, though, that RSA performance is all about manipulating big numbers, where the advantage of 64-bit arithmetic manifests itself very strongly, so this should be seen as an extreme example of the advantages that 64-bit executables gain from 64-bit arithmetic.
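A back-of-the-envelope count helps explain why big-number code benefits so much. The sketch below assumes plain schoolbook multiplication, where multiplying two n-limb numbers takes n × n limb multiplications; real libraries such as OpenSSL use Montgomery multiplication and other refinements, so treat this as intuition rather than a model of OpenSSL. The function names are hypothetical.

```cpp
// Number of limbs needed to hold a number of the given bit width.
constexpr unsigned long limbCount(unsigned bits, unsigned limbBits)
{
    return (bits + limbBits - 1) / limbBits;
}

// Limb multiplications for one schoolbook bits x bits multiplication
// (an assumed cost model, not a measurement).
constexpr unsigned long schoolbookMuls(unsigned bits, unsigned limbBits)
{
    return limbCount(bits, limbBits) * limbCount(bits, limbBits);
}
```

For 4096-bit operands this gives 128 × 128 = 16,384 multiplications with 32-bit limbs against 64 × 64 = 4,096 with 64-bit limbs; for 512-bit operands, 256 against 64. The model predicts the same 4x for both sizes, while the measured gain for RSA-512 was only about 2x, presumably because work other than limb multiplication takes a larger share for small keys.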

Performance – number of registers (pro 64-bit)

On x64, the number of general-purpose registers has been increased from x86's 8 to 16. For many computation-intensive applications this may provide a noticeable speed improvement.

For ARM, the situation is a bit more complicated. While 64-bit ARM has almost twice as many general-purpose registers as 32-bit ARM (31 vs 16), my somewhat educated guess (based on informal observations of typical code complexity and of the number of registers that can be efficiently utilized by such code) is that in most cases the advantage of doubling 8 registers (as when moving from x86 to x64) will be significantly greater than that of doubling 16 registers (as when moving from 32-bit ARM to 64-bit ARM).

Amount of memory used (pro 32-bit)

With all the benefits of 64-bit platforms outlined above, an obvious question arises: why not simply re-compile everything to 64-bit and forget about 32-bit on 64-bit platforms once and for all? The answer is that with 64-bit, every pointer inevitably takes 8 bytes against 4 bytes with 32-bit, which has its costs. But how much negative effect can it cause in practice?

Impact on performance – worst case is very bad for 64-bit

To investigate, I wrote a simple program that chooses a number N, creates a set populated with the numbers 0 to N−1, and then benchmarks the following fragment of code:

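```cpp
#include <cstdlib>
#include <set>

// Looks up 10*N random keys in a set holding 0..N-1; returns the hit count
int benchmarkSet(int N)
{
    std::set<int> s;
    for (int i = 0; i < N; ++i)
        s.insert(i);

    int dummyCtr = 0;
    for (int j = 0; j < N * 10; ++j)
    {
        // glue several rand() results together; unsigned math avoids overflow UB
        unsigned val = ((static_cast<unsigned>(std::rand()) << 12)
                        + std::rand()) << 12;
        val += std::rand();
        val %= static_cast<unsigned>(N);
        dummyCtr += (s.find(static_cast<int>(val)) != s.end());
    }
    return dummyCtr; // returned so the lookups cannot be optimized away
}
```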

When running such a program with gradually increasing N, there will be a point at which the program takes all available RAM and starts swapping, causing extreme performance degradation (in my case, up to 6400x; your mileage may vary, but in any case the degradation is expected to be 2 to 4 orders of magnitude).

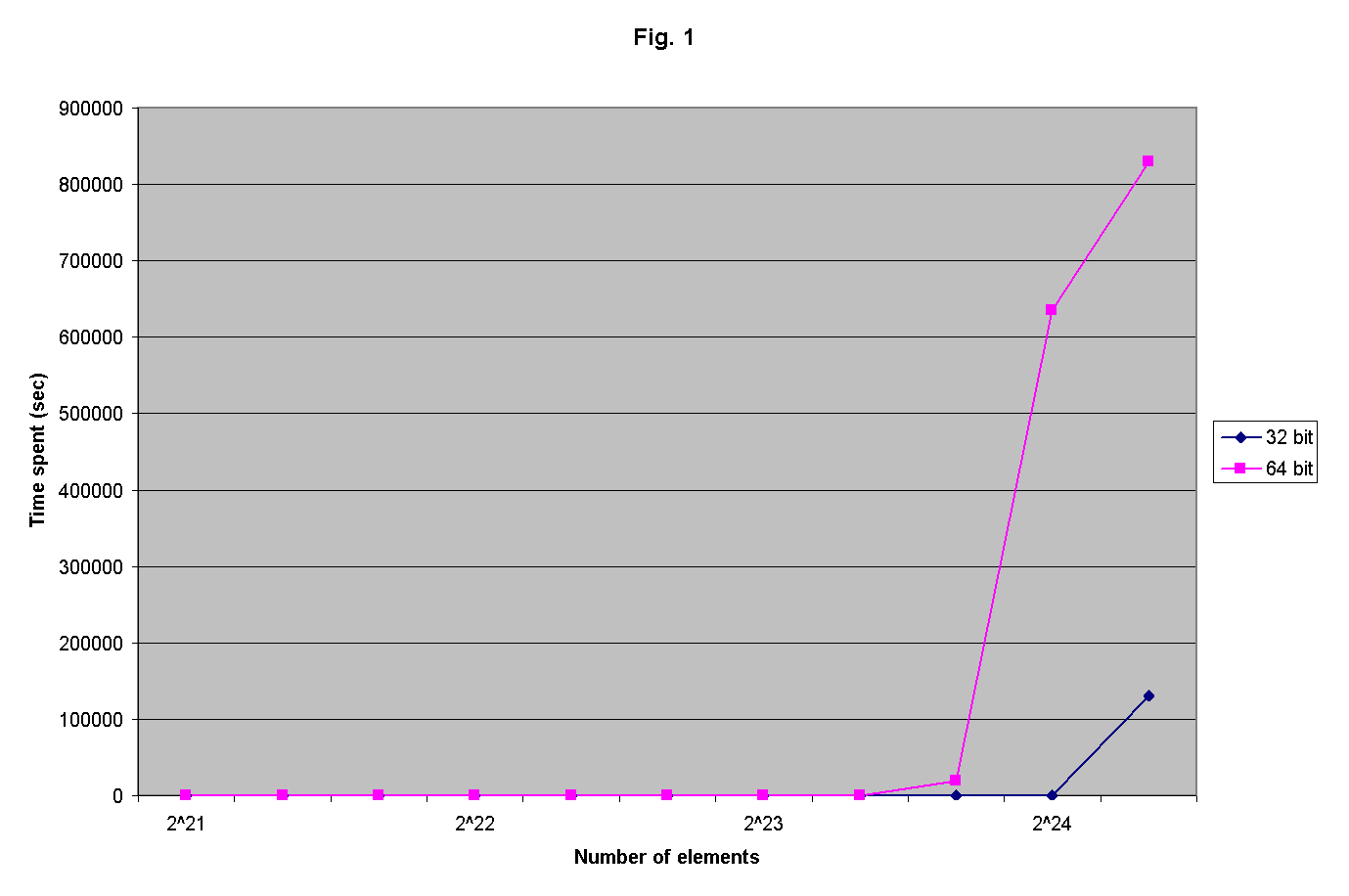

I ran the program above (both 32-bit and 64-bit versions) on a 64-bit machine with 1G of RAM available; the results are shown in Figure 1.

Figure 1

It is obvious that for N between $2^{24-1/3}$ and $2^{24+1/3}$ (that is, roughly, between 13,000,000 and 21,000,000), the 32-bit application runs about 1000x faster. A pretty bad result for a 64-bit program.
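Working out the endpoints of that range, and the factor between them, gives the following; the factor of about 1.6 is what any explanation of the gap has to account for:

$$
2^{24-1/3} \approx 1.33\times10^{7}, \qquad 2^{24+1/3} \approx 2.11\times10^{7}, \qquad \frac{2^{24+1/3}}{2^{24-1/3}} = 2^{2/3} \approx 1.59
$$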

The reason for such behavior is rather obvious: `set<int>` is normally implemented as a tree, with each node of the tree¹ containing an `int`, two `bool`s, and 3 pointers. For a 32-bit application this makes each node use 4+4+3×4=20 bytes (the second 4 being the two `bool`s plus alignment padding), and for a 64-bit one each node uses 4+4+3×8=32 bytes, or 1.6 times more. With the amount of physical RAM being the same for 32-bit and 64-bit programs, the number of nodes that fit in memory for the 64-bit application is expected to be 1.6x smaller than for the 32-bit application, which roughly corresponds to what we observe on the graph: the ratio of 21,000,000 to 13,000,000 observed in the experiment is indeed very close to the ratio of 32 to 20 bytes.
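The node layout described above can be checked with a stand-in struct. `NodeModel` and its field names are hypothetical: real `std::set` nodes differ between standard libraries, and the heap allocator adds its own per-node overhead, so this only models the layout used in the estimate.

```cpp
// Hypothetical stand-in for a tree node: an int key, two bool flags,
// and three pointers, in that order.
struct NodeModel
{
    int value;
    bool isRed;        // hypothetical flag names
    bool isNil;
    NodeModel* left;
    NodeModel* right;
    NodeModel* parent;
};

// Common 32-bit ABIs: 4 + 2 (+2 padding) + 3*4 = 20 bytes.
// Common 64-bit ABIs: 4 + 2 (+2 padding) + 3*8 = 32 bytes
// (the pointers need 8-byte alignment, which the padding provides).
```

Printing `sizeof(NodeModel)` from builds for both targets (for example, GCC's `-m32` and `-m64` on x86) should show the 20 vs 32 bytes used above.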

One may argue that nobody uses a 64-bit OS with a mere 1G of RAM. While this is correct, I should mention that [almost] nobody runs a 64-bit OS with just one single executable, and that if an application uses 1.6x more RAM merely because it was recompiled to 64-bit without giving it a thought, it is a resource hog for no real reason. Another way of seeing it is that if all applications behave like this, the total amount of RAM consumed may increase significantly, which will lead to a greatly increased amount of swapping and poorer performance for the end user.

Impact on performance – caches

The effects of increased RAM usage are not limited to extreme cases of swapping. A similar effect (though with a significantly smaller performance hit) can be observed at the boundary of the L3 cache. To demonstrate it, I ran another experiment. This program:

- chooses a number N
- creates a `list<int>` of size N, with the elements of the list randomized in memory (as it would look after a long history of random inserts/erases)
- benchmarks the following piece of code:

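```cpp
#include <list>

// Walks lst from begin to end repeatedly until more than stepTotal elements
// have been visited in total; N is the number of elements in lst (N > 0).
unsigned benchmarkList(const std::list<int>& lst, int N)
{
    unsigned dummyCtr = 0;   // unsigned, so the running sum may wrap without UB
    const int stepTotal = 10000000;
    int stepCnt = 0;

    for (;;)
    {
        for (std::list<int>::const_iterator lst_Iter = lst.begin();
             lst_Iter != lst.end(); ++lst_Iter)
            dummyCtr += *lst_Iter;

        stepCnt += N;        // one full pass visits N elements
        if (stepCnt > stepTotal)
            break;
    }
    return dummyCtr;
}
```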
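The article does not say how the list was randomized in memory, so here is one possible way to do it, offered only as an assumption: allocate the nodes in order, then relink them in a shuffled order with `std::list::splice`, which moves existing nodes without copying or reallocating them. `makeScatteredList` is a hypothetical helper.

```cpp
#include <algorithm>
#include <cstddef>
#include <list>
#include <random>
#include <vector>

// Hypothetical helper: returns a list holding 0..N-1 whose traversal order
// is shuffled with respect to the order in which its nodes were allocated.
std::list<int> makeScatteredList(int N, unsigned seed)
{
    std::list<int> sequential;
    for (int i = 0; i < N; ++i)
        sequential.push_back(i);   // on a fresh heap, typically adjacent nodes

    std::vector<std::list<int>::iterator> nodes;
    nodes.reserve(static_cast<std::size_t>(N));
    for (auto it = sequential.begin(); it != sequential.end(); ++it)
        nodes.push_back(it);

    std::mt19937 rng(seed);
    std::shuffle(nodes.begin(), nodes.end(), rng);

    // splice relinks each node into the new list; list iterators stay valid
    std::list<int> scattered;
    for (auto it : nodes)
        scattered.splice(scattered.end(), sequential, it);
    return scattered;
}
```

The values get shuffled along with the nodes, which does not matter for a traversal that just sums all elements, as the loop above does.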

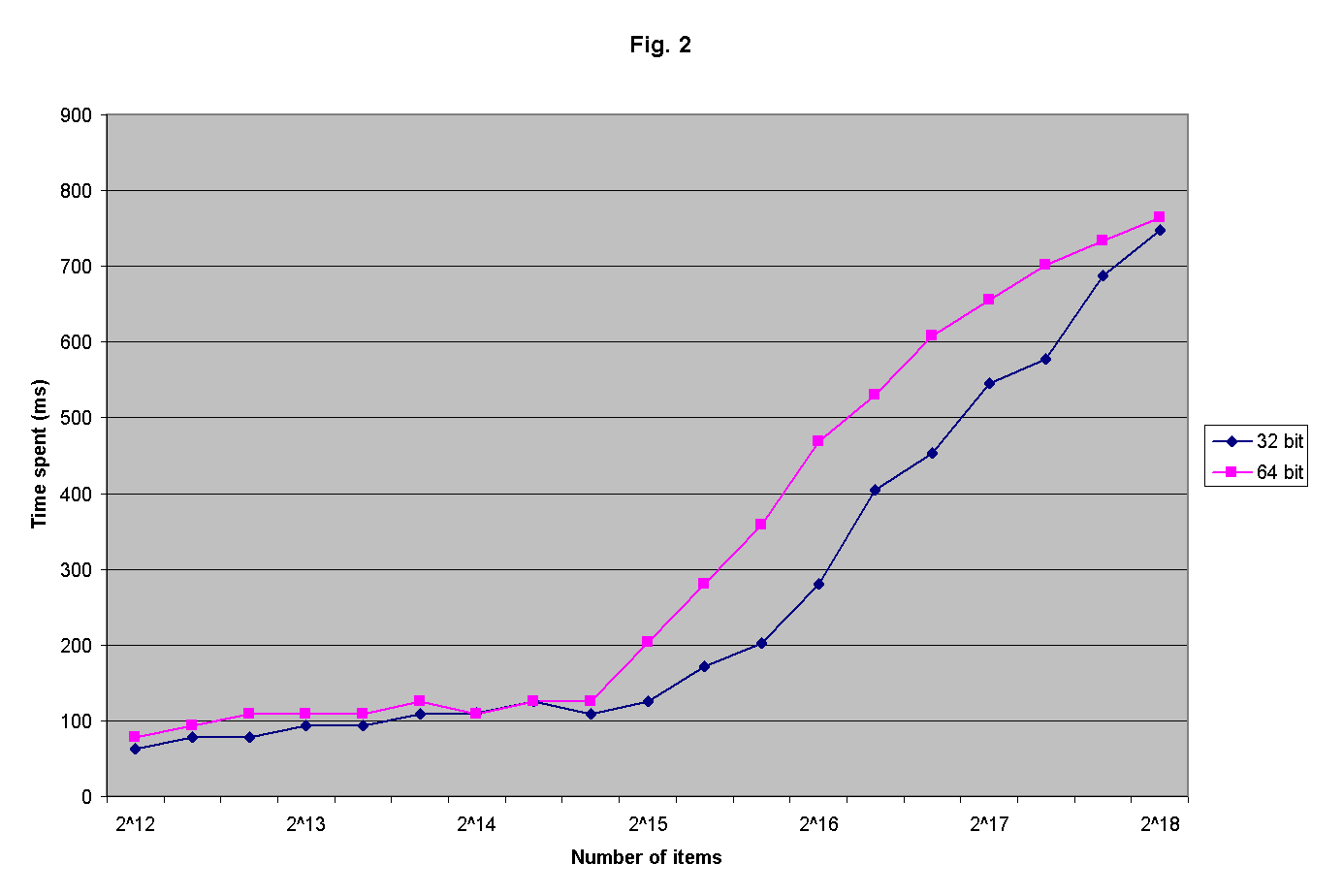

With this program, I got the results shown in Figure 2 on my test system, which has 3MB of L3 cache.

Figure 2

As can be seen, the effect is similar to that with swapping, but significantly less prominent (the greatest difference in the ‘window’ from $N = 2^{15}$ to $N = 2^{18}$ is a mere 1.77x, with an average of 1.4x).
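A rough estimate shows why the window sits where it does. The sketch below models a doubly linked list node as two pointers plus an `int`; `ListNodeModel` and `nodesThatFit` are hypothetical names, and the model ignores allocator headers and everything else competing for the cache.

```cpp
#include <cstddef>

// Hypothetical model of a std::list<int> node: two links plus the value.
// 32-bit: 4 + 4 + 4 = 12 bytes; 64-bit: 8 + 8 + 4 (+4 padding) = 24 bytes.
struct ListNodeModel
{
    ListNodeModel* next;
    ListNodeModel* prev;
    int value;
};

// How many nodes of nodeSize bytes fit into cacheBytes of cache,
// assuming nothing else occupies it.
constexpr std::size_t nodesThatFit(std::size_t cacheBytes, std::size_t nodeSize)
{
    return cacheBytes / nodeSize;
}
```

With 3MB = 3,145,728 bytes, `nodesThatFit(3145728, 12)` is 262,144 ($2^{18}$) and `nodesThatFit(3145728, 24)` is 131,072 ($2^{17}$), so between roughly $2^{17}$ and $2^{18}$ elements the 32-bit list can still fit into L3 while the 64-bit one cannot. Allocator overhead would push both limits lower.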

Impact on performance – memory accesses in general

One more thing that can be observed from the graph in Figure 2 is that in my experiments the performance of the 64-bit memory-intensive application tends to be worse than that of the 32-bit one (by approx. 10–20%), even when both applications fit within the cache (or when neither does). I tend to attribute this effect to the 64-bit application's more intensive use of the lower-level caches (L1/L2; other things such as instruction caches and/or the TLB may also be involved), though I admit that at this point this is more of a guess.

Conclusion

As should be fairly obvious from the above, I suggest avoiding ‘automatically’ recompiling to 64-bit without significant reasons to do so. So, if you need more than 2–4G of RAM, or if you have lots of computational stuff, or if you have benchmarked your application and found that it performs better as 64-bit, by all means recompile to 64 bits and forget about 32 bits. However, there are cases (especially memory-intensive apps with complicated data structures and lots of indirections) where moving to 64 bits can make your application slower (in extreme cases, orders of magnitude slower).

References

[Loganberry04] David ‘Loganberry’, Frithaes! – an Introduction to Colloquial Lapine!, http://bitsnbobstones.watershipdown.org/lapine/overview.html

[Techcrunch14] John Biggs, Qualcomm Announces 64-Bit Snapdragon Chips With Integrated LTE, http://techcrunch.com/2014/02/24/qualcomm-announces-64-bit-snapdragon-chips-with-integrated-lte/

[DPReview14] Dean Holland, Smartphones versus DSLRs versus film: A look at how far we’ve come, http://connect.dpreview.com/post/5533410947/smartphones-versus-dslr-versus-film?page=4

Acknowledgement

Cartoon by Sergey Gordeev from Gordeev Animation Graphics, Prague.