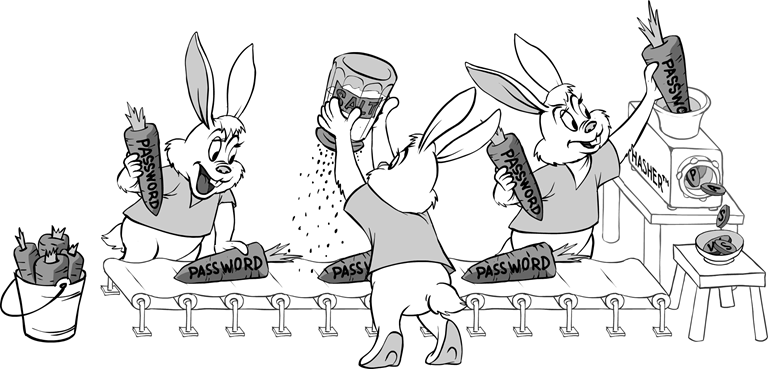

Password hashing is important. Sergey Ignatchenko explains how to do it properly.

Disclaimer: as usual, the opinions within this article are those of ‘No Bugs’ Hare, and do not necessarily coincide with the opinions of the translators and Overload editors; also, please keep in mind that translation difficulties from Lapine (like those described in [Loganberry04]) might have prevented an exact translation. In addition, the translator and Overload expressly disclaim all responsibility from any action or inaction resulting from reading this article.

Password hashing is a non-trivial topic, which has recently become quite popular. While it is certainly not the only thing which you need to do to make your network app secure, it is one of those security measures every security-conscious developer should implement. In this article, we’ll discuss what it is all about, why hash functions need to be slow, and how password hashing needs to be implemented in your applications.

What is it all about?

Whenever we’re speaking about security, there is always the question: what exactly is the threat we’re trying to protect ourselves from? For password hashing, the answer is very unpleasant: we’re trying to mitigate the consequences arising from stealing the whole of your site’s password database. This is usually accompanied by the potential for stealing pretty much any other data in your database, and represents the Ultimate Nightmare of any real-world security person.

Some (including myself) will argue that such mitigation is akin to locking the stable door after the horse has bolted, and that security efforts should be directed towards preventing the database-stealing from happening in the first place. While I certainly agree with this line of argument, implementing password hashing is so simple and takes so little time (that is, if you designed for it from the very beginning) that it is simply imprudent not to implement it. Not to mention that if you’re not doing password hashing, everybody (your boss and any code reviewers/auditors included) will say, “Oh, you don’t do password hashing, which is The Second Most Important Security Feature In The Universe (after encryption, of course).”

The most important thing, however, is not to forget about a dozen other security-related features which also need to be implemented (such as TLS encryption, not allowing passwords which are listed in well-known password dictionaries, limits on login rate, etc. etc. – see the ‘Bottom line’ section below for some of these).

Attack on non-hashed passwords

So, we’re considering the scenario where the attacker has got your password database (DB). What can he do with it? In fact, all the relevant attacks (at least those I know about) are related to recovering a user’s password, which allows impersonation of that user. Subtle variations of the attack include being able to recover any password (phishing for passwords), or being able to recover one specific password (for example, an admin’s password).

If your DB stores your passwords in plain text, then the game is over – all the passwords are already available to the attacker, so he can impersonate each and every user. Pretty bad.

Attempt #1: Simple hashing

You may say, “Hey, let’s hash it with SHA256 (SHA-3, whatever-else secure hash algorithm), and the problem is gone!”, and you will be on the way to solving the problem. However, it is more complicated than that.

Let’s consider in more detail the scenario where you’re storing passwords in the form

P'=SHA256(P) (*)

where P is user-password, and P' is password-stored-in-the-database.

Dictionary attacks

So, an attacker has got your password DB, where all the passwords are simply hashed with SHA256 (or any other fast hash function), as described above in formula (*). What can he do with the database?

First of all, he can try to get a dictionary of popular passwords, and – for each such dictionary password – hash it with SHA256 and try matching it with all the records in your database (this is known as a ‘dictionary attack’). Note that using simple P'=SHA256(P) means that the same P will always have the same P', i.e. that identical passwords stay identical after hashing.

This observation allows the attacker to pre-calculate SHA256 hashes for all the popular passwords once, and then compare them to all the records in your DB (or any other DB which uses the same simple hashing). While this kind of attack is certainly much more difficult than just taking an unhashed password, and therefore simple hashing is clearly better than not hashing passwords at all, there is still a lot of room for improvement.
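To make the pre-calculation concrete, here is a minimal Python sketch of a dictionary attack on simply-hashed passwords. The user names, passwords and four-word ‘dictionary’ are made-up placeholders; a real attacker would use a list with millions of entries, but the structure is the same: hash every dictionary word once, then match the whole DB by lookup.

```python
import hashlib

def sha256_hex(password: str) -> str:
    """Simple (unsalted) hashing as in formula (*): P' = SHA256(P)."""
    return hashlib.sha256(password.encode("utf-8")).hexdigest()

# Hypothetical stolen DB: user -> P', stored without any salt.
stolen_db = {
    "alice": sha256_hex("123456"),
    "bob": sha256_hex("correct horse battery staple"),
    "carol": sha256_hex("password"),
}

# Hypothetical dictionary of popular passwords.
dictionary = ["123456", "password", "qwerty", "letmein"]

# Pre-calculation: done ONCE, and reusable against any DB with the same simple hashing.
precomputed = {sha256_hex(word): word for word in dictionary}

# Matching every record then costs just one dictionary lookup per user.
cracked = {user: precomputed[h] for user, h in stolen_db.items() if h in precomputed}
print(cracked)  # {'alice': '123456', 'carol': 'password'}
```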

Attempt #2: Salted hash

To deal with the fact that the same password always produces the same hash (which enables pre-calculation for a dictionary attack, as well as some other pre-calculation attacks briefly mentioned below), so-called ‘salted hashes’ are commonly used.

The idea is the following: in addition to P' (the password-stored-in-the-database), for each user we store S, the so-called ‘salt’. Whenever we need to store the password, we calculate

P'=SHA256(S||P)

where || denotes concatenation (as string/data block concatenation).

As long as S is different for each user, the hashes will be different for different users, even if their passwords are exactly the same. This, in turn, means that pre-calculation for a dictionary attack won’t work, making the life of an attacker significantly more complicated (at virtually no cost to us). In fact, ‘salted hashes’ as described above, defeat quite a few other flavours of pre-calculation attacks, including so-called ‘rainbow table’ attacks.
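A minimal Python sketch of the salted construct P'=SHA256(S||P), assuming the password is encoded as UTF-8 before concatenation (our choice; the text above doesn’t mandate an encoding). Two users with the same password end up with different P', and a table pre-computed without salt no longer matches anything.

```python
import hashlib
import os

def salted_sha256(salt: bytes, password: str) -> bytes:
    """P' = SHA256(S || P): the salt bytes followed by the UTF-8 password bytes."""
    return hashlib.sha256(salt + password.encode("utf-8")).digest()

# Two users who happen to pick the same password, each with their own random salt.
salt_alice, salt_bob = os.urandom(16), os.urandom(16)
p_alice = salted_sha256(salt_alice, "123456")
p_bob = salted_sha256(salt_bob, "123456")

# Same password, different stored hashes (equal salts are astronomically unlikely).
print(p_alice != p_bob)  # True

# A table pre-computed with unsalted SHA256 no longer matches the stored value.
unsalted_table = {hashlib.sha256(b"123456").digest(): "123456"}
print(p_alice in unsalted_table)  # False
```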

The next practical question is what to use as salt. First of all, S must be unique for each user. Being statistically unique (i.e. having collisions between salts for different users very unlikely) is also acceptable; it means that if you’re using random salts of sufficient length, you’re not required to check the salt for uniqueness.

Traditionally (see, for example, [Crackstation]), it is recommended that S should be a crypto-quality random number at least 128 bits long, usually stored alongside the password in the user DB. Crypto-quality random numbers can easily be obtained from /dev/urandom on most Linux systems, and by using something like CryptGenRandom() on Windows.
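In Python, for example, the OS generator is exposed as os.urandom() (and, equivalently, secrets.token_bytes()), so a 128-bit salt is a one-liner. A sketch; the 16-byte constant simply encodes the ‘at least 128 bits’ recommendation above.

```python
import os
import secrets

SALT_BYTES = 16  # 128 bits, the minimum length recommended above

def new_salt() -> bytes:
    """Return a fresh crypto-quality random salt from the OS generator.

    os.urandom() is backed by the operating system's CSPRNG (/dev/urandom or
    getrandom() on Linux, the system RNG on Windows), so no file handling is needed.
    """
    return os.urandom(SALT_BYTES)

salt = new_salt()
print(len(salt) * 8)  # 128

# secrets.token_bytes() is an equivalent, more self-documenting spelling.
other_salt = secrets.token_bytes(SALT_BYTES)
```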

Note that, despite some very confusing wording on this subject in [CppReference], none of the C++11 random number engines (LCG, Mersenne Twister, or Lagged Fibonacci) is considered good enough for cryptographic purposes – in short, they’re way too predictable and can be broken by a determined attacker, given that enough output has leaked. Overall, random number generation for security purposes is a very complicated subject, and goes far beyond the scope of this article, but currently the safest bet in this regard is to use Schneier and Ferguson’s [Fortuna] generator with OS events fed to it as entropy input (this is the kind of design used for /dev/urandom on some Unix-like systems), or (if you do not have the luxury of having entropy on the go, but can get a cryptographic-quality seed) the so-called Blum Blum Shub generator.
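To illustrate ‘given enough output has leaked’, the following Python sketch clones a Mersenne Twister from its output. Python’s random module uses MT19937, the same algorithm as C++11’s std::mt19937; 624 consecutive 32-bit outputs are enough to reconstruct the whole internal state by inverting the output ‘tempering’ step. The sketch relies on CPython’s getstate()/setstate() state format, which is an implementation detail, and is meant as a demonstration only.

```python
import random

def _undo_right_shift_xor(y: int, shift: int) -> int:
    """Invert y ^= y >> shift for a 32-bit word."""
    result = y
    for _ in range(32 // shift):
        result = y ^ (result >> shift)
    return result

def _undo_left_shift_xor_and(y: int, shift: int, mask: int) -> int:
    """Invert y ^= (y << shift) & mask for a 32-bit word."""
    result = y
    for _ in range(32 // shift):
        result = y ^ ((result << shift) & mask)
    return result & 0xFFFFFFFF

def untemper(y: int) -> int:
    """Undo MT19937's tempering (in reverse order) to recover one state word."""
    y = _undo_right_shift_xor(y, 18)
    y = _undo_left_shift_xor_and(y, 15, 0xEFC60000)
    y = _undo_left_shift_xor_and(y, 7, 0x9D2C5680)
    y = _undo_right_shift_xor(y, 11)
    return y

def clone_mt19937(outputs: list[int]) -> random.Random:
    """Rebuild a generator from 624 consecutive 32-bit outputs."""
    if len(outputs) != 624:
        raise ValueError("need exactly 624 consecutive 32-bit outputs")
    state = [untemper(o) for o in outputs]
    clone = random.Random()
    # CPython's state: (version 3, 624 state words + position index, gauss_next).
    clone.setstate((3, tuple(state + [624]), None))
    return clone

victim = random.Random()  # seeded from the OS; the attacker never sees the seed
leaked = [victim.getrandbits(32) for _ in range(624)]
predictor = clone_mt19937(leaked)
print(predictor.getrandbits(32) == victim.getrandbits(32))  # True
```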

However, some people (such as [Contini]) argue that using a concatenation of a supposedly unique site-ID with a unique user-ID (which is already available within the database) as S is about as good as using a crypto-random S. While I tend to agree with Contini’s arguments in this regard, I still prefer to play it safe and use a crypto-random S as long as it is easy to do so. At the very least, the ‘playing it safe’ approach will save you time if/when you need to go through a security review/audit, because you won’t need to argue about using not-so-standard stuff (which is always a pain in the neck).

So, the suggested solution with regard to server-side salting is the following:

- store S for each user in the same DB (there is no need to encrypt S; it can be stored as plain text)

- whenever a password needs to be stored for a user, take S (of at least 128-bit length) from /dev/urandom¹ or from CryptGenRandom()

- store an (S,P') pair for each user, calculated as P'=SHA256(S||P), where || denotes concatenation. P must never be stored in the DB.
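Putting these three points together, a minimal Python sketch of storing and checking a salted hash might look like the following. StoredPassword, store_password() and check_password() are hypothetical names, and the constant-time comparison via hmac.compare_digest() is our own addition rather than something discussed above. Note that this still uses plain SHA256, which (as discussed below) is not the end of the story.

```python
import hashlib
import hmac
import os
from dataclasses import dataclass

@dataclass(frozen=True)
class StoredPassword:
    """What goes into the user DB: S and P' = SHA256(S||P), never P itself."""
    salt: bytes
    hashed: bytes

def store_password(password: str) -> StoredPassword:
    salt = os.urandom(16)  # 128 bits from the OS CSPRNG
    hashed = hashlib.sha256(salt + password.encode("utf-8")).digest()
    return StoredPassword(salt, hashed)

def check_password(record: StoredPassword, attempt: str) -> bool:
    candidate = hashlib.sha256(record.salt + attempt.encode("utf-8")).digest()
    # Constant-time comparison avoids leaking how many leading bytes matched.
    return hmac.compare_digest(candidate, record.hashed)

record = store_password("s3cret-Passw0rd")
print(check_password(record, "s3cret-Passw0rd"))  # True
print(check_password(record, "wrong guess"))      # False
```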

As discussed above, this approach is much more solid than simple hashing, but... there is still a caveat.

Prohibition on passwords in known dictionaries

Some may ask: “Hey, why bother with salting if we can simply prohibit users from using passwords from known dictionaries?” The answer to this question is the following:

You do need both to prohibit passwords from known dictionaries and to use salt as described above.

Prohibiting dictionary passwords is necessary even if passwords are ‘salted’, because a dictionary attack is still possible: if the attacker looks for one single password, he can still run the whole dictionary against this specific password, whether it is salted or not (what salt does is increase many-fold the cost of ‘phishing’ for any password in the DB).
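A Python sketch of such a targeted attack against a single salted (S,P') record, with a made-up admin password and a hypothetical four-word dictionary: the salt rules out pre-computation, but each dictionary word still costs the attacker only one SHA256 call.

```python
import hashlib
import os

def salted_sha256(salt: bytes, password: str) -> bytes:
    """P' = SHA256(S || P)."""
    return hashlib.sha256(salt + password.encode("utf-8")).digest()

# One stolen record, e.g. an admin's (S, P') pair.
admin_salt = os.urandom(16)
admin_hash = salted_sha256(admin_salt, "letmein")

dictionary = ["123456", "password", "qwerty", "letmein"]  # hypothetical word list

def targeted_dictionary_attack(salt: bytes, target: bytes, words: list[str]):
    """Hash every word with THIS record's salt; nothing can be pre-computed."""
    for word in words:
        if salted_sha256(salt, word) == target:
            return word
    return None

print(targeted_dictionary_attack(admin_salt, admin_hash, dictionary))  # letmein
```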

Salt is necessary even if passwords from dictionaries are prohibited, because besides a dictionary pre-computation attack, there is a whole class of pre-computation attacks, including ‘rainbow table’-based attacks. The idea behind pre-computed ‘rainbow tables’ is not trivial, and is beyond the scope of this article (those interested may look at [WikiRainbow]), but such attacks are prevented by ‘salting’ in pretty much the same way as a pre-computed dictionary attack is.

Offline brute-force attacks on fast hash functions

Even after we have added ‘salt’ to our P' as described above, and prohibited dictionary passwords, there is still a possible attack on our password DB. :(

This attack is known as an offline brute-force attack, wherein an attacker has the whole password DB; it is different from an online brute-force attack, where an attacker simply attempts to log in repeatedly (and which can and should be addressed by enforcing a login rate limit).

To mount an offline brute-force attack, the attacker needs to have the password DB (or at least one entry from it, including the salt S and the hashed password P'). Then the attacker may simply take this (S,P') pair and run all possible password variations through our SHA256(S||P) construct; as soon as SHA256(S||attempted-P) matches P' – bingo! attempted-P is the real password P for this user.²
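The brute-force loop itself is trivial. Here is a Python sketch over a deliberately tiny, made-up search space (three lower-case letters, i.e. 26^3 = 17,576 candidates) so that it finishes instantly; a real attack differs only in the alphabet, the length, and the amount of hardware thrown at it.

```python
import hashlib
import itertools
import os
import string

def salted_sha256(salt: bytes, password: str) -> bytes:
    """P' = SHA256(S || P)."""
    return hashlib.sha256(salt + password.encode("utf-8")).digest()

def brute_force(salt: bytes, target: bytes, alphabet: str, length: int):
    """Try every password of the given length until SHA256(S||attempted-P) == P'."""
    for chars in itertools.product(alphabet, repeat=length):
        attempt = "".join(chars)
        if salted_sha256(salt, attempt) == target:
            return attempt  # bingo
    return None

# Toy search space: all 3-letter lower-case passwords.
salt = os.urandom(16)
stolen_hash = salted_sha256(salt, "zqx")
print(brute_force(salt, stolen_hash, string.ascii_lowercase, 3))  # zqx
```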

Brute-force attacks, such as the one described above, are practical only if the number of possible passwords is quite small. If we had 2^256 (or even a measly 2^128) different passwords for the attacker to analyze, a brute-force attack wouldn’t be feasible at all (i.e. all the computers currently available on the Earth wouldn’t be able to crack it until the Sun reaches the end of its lifetime).³

However, the number of possible passwords (known as the ‘size of the search space’) is relatively low, which opens the door for a brute-force attack. If we consider a search space consisting of all 8-character passwords, then (assuming that upper- and lower-case letters and digits are allowed) we get (26+26+10)^8 ≈ 2.2e14 potential passwords to try. While this might seem a large enough number, it is not.

Modern GPUs are notoriously good at calculating hashes; also note that the search task is inherently trivial to parallelise. In practice, it has been reported that a single stock GPU can calculate on the order of 1e9 SHA256 hashes per second [HashCat]. This means that trying all the 8-character passwords within our 2.2e14 search space (and therefore getting an 8-character password for a single user for sure) will take only about 2.5 days on a single stock GPU. :( As mentioned in [SgtMaj], this means that the upper bound of the cost of breaking the password is a mere $39. This is despite having used an industry-standard (and supposedly unbreakable) hash function, and despite the whole thing being salted. :(
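The arithmetic above is easy to re-check. A small Python sketch, assuming (as above) the 62-character alphabet, a rate of 1e9 SHA256 hashes per second on one GPU, and an attacker who goes through the whole search space (on average, a hit comes after about half of it):

```python
SECONDS_PER_DAY = 86_400
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY

def search_space(length: int, alphabet_size: int = 26 + 26 + 10) -> int:
    """Number of candidate passwords of exactly `length` characters."""
    return alphabet_size ** length

def exhaustive_search_seconds(length: int, hashes_per_second: float = 1e9) -> float:
    """Worst-case time to try every candidate at the given hash rate."""
    return search_space(length) / hashes_per_second

print(f"{search_space(8):.2e}")                                 # 2.18e+14
print(f"{exhaustive_search_seconds(8) / SECONDS_PER_DAY:.1f}")  # 2.5 (days)

# Longer passwords, same single GPU:
for n in (10, 12):
    years = exhaustive_search_seconds(n) / SECONDS_PER_YEAR
    print(n, f"{years:.3g} years")
```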

Note that the attack above doesn’t depend on the nature of the hash function: it doesn’t rely on any vulnerability in SHA256; the only thing the attack relies on is that SHA256 is a reasonably fast hash function.

Mitigation #1: Enforce long passwords

What can be done about these brute-force attacks? Two approaches are known in this field. The first approach is to enforce a minimum password length of more than 8 characters. This is illustrated by Table 1, which shows that if we can enforce all users having relatively long passwords (at least 10–12 characters depending on the value of the information we’re trying to protect), we might be OK. However, with users being notoriously reluctant to remember passwords longer than 8 characters, this might be infeasible; moreover, with the power of computers still growing pretty much exponentially, we’d soon need to increase the password length even more, causing even more frustration for users. :(

Table 1

Going beyond a password length of 12 isn’t currently worthwhile; IMNSHO (in my not-so-humble opinion), any security professional who is trying to protect information which is worth spending half a billion to get with a mere password (i.e. without so-called ‘two-factor authentication’) should be fired on the spot.
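For illustration only, a Python sketch of a new-password check combining a length requirement with a ban on well-known passwords. MIN_LENGTH, MAX_LENGTH and the four-entry BLOCKLIST are hypothetical values: in practice the block list would be loaded from a published list of leaked passwords, and MIN_LENGTH chosen according to the value of what you are protecting.

```python
# Hypothetical policy values; raise MIN_LENGTH toward 10-12 for more valuable data,
# and keep MAX_LENGTH generous (at least 12, preferably 16 or more).
MIN_LENGTH = 8
MAX_LENGTH = 64

# Hypothetical stand-in for a well-known leaked-password list loaded from disk.
BLOCKLIST = {"password", "12345678", "qwertyuiop", "iloveyou"}

def password_problems(password: str) -> list[str]:
    """Return human-readable reasons to reject a new password (empty list = OK)."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append(f"shorter than {MIN_LENGTH} characters")
    if len(password) > MAX_LENGTH:
        problems.append(f"longer than {MAX_LENGTH} characters")
    if password.lower() in BLOCKLIST:
        problems.append("appears in a well-known password list")
    return problems

print(password_problems("iloveyou"))      # ['appears in a well-known password list']
print(password_problems("Tr0ub4dor&3x"))  # []
```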

Mitigation #2: Use intentionally slow hash functions

As noted above, to mount a brute-force attack, an attacker needs our hash function to be reasonably fast. If the hash function is, say, 100,000 times slower than SHA256, then the attack costs for the attacker go up 100,000-fold.

That’s exactly what people are commonly doing to protect themselves from a brute-force attack on a stolen password DB – they’re using hash functions which are intentionally slow.

Several intentionally slow hash functions have been developed for exactly this purpose, with the most popular ones being PBKDF2, bcrypt, and (more recently) scrypt. As of now (mid-2015), I would suggest scrypt – which, in addition to being intentionally slow, is specially designed to use quite a lot of RAM and to run quite poorly on GPUs while being not-so-bad for CPUs – or PBKDF2, if you need to keep your crypto NIST-standardized.
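Both scrypt and PBKDF2 are available in Python’s standard hashlib module (hashlib.scrypt() requires Python to be built against OpenSSL 1.1 or newer). A sketch; the cost parameters below are illustrative placeholders, not recommendations, since the right values depend on your hardware.

```python
import hashlib
import os

password = "correct horse battery staple".encode("utf-8")
salt = os.urandom(16)

# scrypt: n = CPU/memory cost (a power of two), r = block size, p = parallelism.
# Memory use is roughly 128 * r * n bytes, i.e. about 16 MiB for these values.
scrypt_hash = hashlib.scrypt(password, salt=salt, n=2**14, r=8, p=1, dklen=32)

# PBKDF2-HMAC-SHA256, the NIST-standardized option (SP 800-132);
# the iteration count is the knob that makes it slow.
pbkdf2_hash = hashlib.pbkdf2_hmac("sha256", password, salt, 600_000, dklen=32)

print(len(scrypt_hash), len(pbkdf2_hash))  # 32 32
```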

All such intentionally slow functions will have some kind of parameter(s) to indicate how slow you want your function to be (in the sense of ‘how many computations it needs to perform’). Using these functions makes sense only if the requested number of computations is reasonably high.

The next obvious question is, ‘Well, how big is this “reasonably high” number of calculations?’ The answer, as of now, is quite frustrating: ‘as high as you can afford without overloading your server’. :(

Note that when choosing load parameters for your intentionally slow hash function, you need to account for the worst possible case. As noted in [SgtMaj], in many cases with an installable client-app (rather than a browser-based client) this worst-case scenario happens when you’ve got a massive disconnect of all your users, followed by a massive reconnect. In this case, if you’ve got 50,000 users per server, the load caused by intentionally slow hash functions can be quite high, and may significantly slow down the rate at which you’re admitting your users back.⁴
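One way to turn ‘as high as you can afford’ into a number is to measure on the target hardware and then sanity-check the worst case. A rough Python sketch using PBKDF2-HMAC-SHA256 from hashlib; the 100 ms budget and the 8-core server are hypothetical figures, and a single timing sample on an idle machine is only a first approximation.

```python
import hashlib
import os
import time

def calibrate_pbkdf2_iterations(target_seconds: float, start: int = 10_000) -> int:
    """Double the PBKDF2-HMAC-SHA256 iteration count until one hash takes at
    least target_seconds on this machine (single, rough measurement)."""
    password, salt = b"calibration-only", os.urandom(16)
    iterations = start
    while True:
        t0 = time.perf_counter()
        hashlib.pbkdf2_hmac("sha256", password, salt, iterations)
        if time.perf_counter() - t0 >= target_seconds:
            return iterations
        iterations *= 2

def reconnect_backlog_seconds(users: int, seconds_per_hash: float, cores: int) -> float:
    """Time to re-admit every user if each login costs one slow hash on one core."""
    return users * seconds_per_hash / cores

# Hypothetical per-login budget of 100 ms on this machine.
print(calibrate_pbkdf2_iterations(0.1))
# Hypothetical worst case: 50,000 users reconnecting to one 8-core server.
print(reconnect_backlog_seconds(50_000, 0.1, 8))  # 625.0 (over ten minutes at full load)
```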

Mitigation-for-mitigation #2.1: Client + Server hashing

To mitigate this server overload in case of massive reconnects, several solutions (known as ‘server relief’) have been proposed. Most of these solutions (such as [Catena]), however, imply using a new crypto-primitive⁵, which is not exactly practical for application development (that is, until such primitives are implemented in a reputable crypto library).

One very simple but (supposedly) efficient solution is to combine both client-side and server-side hashing. This approach, AFAIK, was first described in a StackExchange question [paj28], with an analysis provided in [SgtMaj].

Client + Server hashing

The ‘Client + Server’ password hashing schema works as follows:

- User enters password P

- P' is calculated (still on the client) as:

  client_slow_hash(SiteID||UserID||P)

  where SiteID is a unique per-site string, UserID is the same ID which is used for logging in, || denotes concatenation, and client_slow_hash is any of the intentionally slow hash functions described above.

- P' is transferred over the wire

- on the server side, P'' is calculated as:

  server_slow_hash(S||P')

  where server_slow_hash may be either the same as or different from client_slow_hash, and S is a crypto-random salt stored within the user DB for each user.

- P'' is compared to the P'' stored within the DB. P' is never stored in the database.
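A minimal Python sketch of this scheme, using scrypt from hashlib for both client_slow_hash and server_slow_hash. SITE_ID, the parameter values, server_register() and server_login() are hypothetical; passing SiteID||UserID as scrypt’s salt argument and P as its password argument is our way of feeding SiteID||UserID||P to a function with a salt/password interface. In reality the two halves would run on different machines, with P' sent over TLS.

```python
import hashlib
import hmac
import os

SITE_ID = b"example.com"  # hypothetical unique per-site string

# Illustrative scrypt cost parameters; each side should be tuned separately.
CLIENT_PARAMS = {"n": 2**14, "r": 8, "p": 1, "dklen": 32}
SERVER_PARAMS = {"n": 2**14, "r": 8, "p": 1, "dklen": 32}

def client_slow_hash(user_id: str, password: str) -> bytes:
    """Runs on the client: P' from SiteID||UserID (as scrypt's salt) and P."""
    client_salt = SITE_ID + user_id.encode("utf-8")
    return hashlib.scrypt(password.encode("utf-8"), salt=client_salt, **CLIENT_PARAMS)

def server_slow_hash(salt: bytes, p_prime: bytes) -> bytes:
    """Runs on the server: P'' from the per-user random salt S and P'."""
    return hashlib.scrypt(p_prime, salt=salt, **SERVER_PARAMS)

def server_register(p_prime: bytes) -> tuple[bytes, bytes]:
    """Return the (S, P'') pair to store; neither P nor P' is ever stored."""
    salt = os.urandom(16)
    return salt, server_slow_hash(salt, p_prime)

def server_login(salt: bytes, stored_p2: bytes, p_prime: bytes) -> bool:
    """Recompute P'' from the received P' and compare in constant time."""
    return hmac.compare_digest(server_slow_hash(salt, p_prime), stored_p2)

# Registration, then two login attempts.
s, p2 = server_register(client_slow_hash("alice", "s3cret-Passw0rd"))
print(server_login(s, p2, client_slow_hash("alice", "s3cret-Passw0rd")))  # True
print(server_login(s, p2, client_slow_hash("alice", "wrong guess")))      # False
```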

This approach shifts some of the server load to the client. While you still need to have both of your hash functions as slow as feasible, Client + Server hashing (when you have an installable client app rather than a browser-based app) may increase the brute-force-attack-cost-for-the-attacker by a factor of 10x to 100x [SgtMaj], which is not that small an improvement security-wise.

Note that while this Client + Server hashing might seem to go against the ‘no double hashing’ recommendation in [Crackstation], in fact it does not: with Client + Server it is not about creating our own crypto-primitive (which is discouraged by Crackstation, and for a good reason), but rather about providing ‘server relief’ at minimal cost (and re-using existing crypto-primitives).

On the other hand (unless/until [WebCrypto] is accepted and widely implemented), this Client + Server hashing won’t be helpful for browser-based apps; the reason for this is simple: any purely JavaScript-based crypto would be way too slow to create enough additional load to bother the attacker.

WebCrypto

WebCrypto, more formally the Web Cryptography API, is a W3C candidate recommendation, essentially aiming to provide access from JavaScript to fast browser-implemented crypto-primitives.

What MIGHT happen in the future

In the future, things might change. Very recently, a ‘Password Hashing Competition’ was held [PHC], looking for new crypto-primitives which allow for better ways of password hashing; while they don’t seem to apply any magic (so being intentionally slow will still be a requirement for them), there is a chance that one of them will become a standard (and will be implemented by the crypto-library-you’re-using) sooner or later. When/if this happens, it will most likely be better to use this new standard mechanism.

Bottom line

Until a new standard for password hashing is here, we (as app developers) need to use the crypto-primitives we already have. Fortunately, this is possible, and reasonably secure, using the approaches described above.

When implementing login for an installable client-app, I would suggest doing the following:

- Encrypt the whole communication with your client. If you’re communicating using TCP, use TLS; if you’re communicating using UDP, use DTLS; for further details see [NoBugs]. Sending a password over an unprotected connection is something you should never do, NEVER EVER.

- Implement Client + Server hashing as described above, configuring both client-side and server-side functions to be as slow as feasible

  - As of now, scrypt is recommended on both the client side and the server side; if following NIST standards is a requirement, PBKDF2 is recommended.

  - For the client side, use load parameters based on the maximum-allowable delay for the slowest-supported client hardware.

  - For the server side, use load parameters based on the maximum-allowable delay in the worst possible case (for example, in case of a massive reconnect, if applicable) for the server-hardware-currently-in-use.

- Set the minimum password length to at least 8

- Allow a maximum password length of at least 12, preferably 16

- Prohibit passwords which are in well-known password databases (and enforce this prohibition)

- Enforce password changes (which will be a separate and quite painful story)

- Do think about how you will provide ‘password recovery’ when you’re asked for it (and you will be, there is absolutely no doubt about it). While ‘password recovery’ is a fallacy from a security point of view, there is a 99% chance that you will be forced to do it anyway, so at least try to avoid the most heinous things such as sending passwords over e-mail (and if you cannot avoid it due to ‘overriding business considerations’, at least limit the password validity time slot, and force the user to change such a password as soon as she logs in for the first time).

- Implement two-factor authentication at least for privileged users, such as admins.

- Implement a login rate limit (to prevent online brute-force attacks)

  - With the precautions listed above, pretty much any reasonable limit will protect from brute-force as such (even limiting logins from the same user to once per second will do the trick).

  - On the other hand, to avoid one user attacking another in a DoS manner, it is better to have two limits. The first is a global limit, which can be, say, one login per second. The second may be a per-user-per-IP limit, which needs to be higher than the first one (and may also grow as the number of unsuccessful attempts increases). With these two limits in place, the whole schema will be quite difficult to DoS.
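A sketch of the two-limit idea in Python, kept in memory in a single process (a real server farm would need shared storage and expiry of old entries). Reading the ‘global’ limit as ‘per user, across all IPs’ is our interpretation, and all the intervals are hypothetical values; the per-user-per-IP interval starts longer than the per-user one and doubles after every failed attempt.

```python
import time
from collections import defaultdict

class LoginRateLimiter:
    """Two login limits, as minimum intervals between attempts (seconds):
    - per user, across all IPs: `user_interval`;
    - per (user, IP): starts at `user_ip_interval`, doubles after each
      failure, capped at `max_interval`.
    """

    def __init__(self, user_interval=1.0, user_ip_interval=2.0,
                 max_interval=300.0, clock=time.monotonic):
        self.user_interval = user_interval
        self.user_ip_interval = user_ip_interval
        self.max_interval = max_interval
        self.clock = clock
        self.last_by_user = {}            # user -> time of last allowed attempt
        self.last_by_user_ip = {}         # (user, ip) -> time of last allowed attempt
        self.failures = defaultdict(int)  # (user, ip) -> consecutive failures

    def _user_ip_wait(self, key):
        # Cap the exponent so the float multiplication can never overflow.
        backoff = 2 ** min(self.failures[key], 20)
        return min(self.user_ip_interval * backoff, self.max_interval)

    def allow(self, user, ip):
        """Return True (and record the attempt) if a login may proceed now."""
        now = self.clock()
        key = (user, ip)
        last = self.last_by_user_ip.get(key)
        if last is not None and now - last < self._user_ip_wait(key):
            return False
        last = self.last_by_user.get(user)
        if last is not None and now - last < self.user_interval:
            return False
        self.last_by_user[user] = now
        self.last_by_user_ip[key] = now
        return True

    def record_result(self, user, ip, success):
        """Reset the back-off on success; lengthen it on failure."""
        key = (user, ip)
        if success:
            self.failures.pop(key, None)
        else:
            self.failures[key] += 1

# Deterministic usage with a fake clock.
now = [0.0]
limiter = LoginRateLimiter(clock=lambda: now[0])
print(limiter.allow("alice", "10.0.0.1"))  # True
limiter.record_result("alice", "10.0.0.1", success=False)
now[0] = 1.5
print(limiter.allow("alice", "10.0.0.1"))  # False: this IP must now wait 4 s
print(limiter.allow("alice", "10.0.0.2"))  # True: only the 1 s per-user limit applies
```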

Phew, this is quite a long list, but unfortunately these are the minimum things which you MUST do if you want to provide your users with the (questionable) convenience of using passwords. Of course, certificate-based authentication (or even better, two-factor authentication) would be much better, and if you can push your management to push your users to use it, it is certainly the way to go; but honestly, this is not likely to happen for 99% of the projects out there. Another way is to rely on Facebook/whatever-other-service-everybody-already-has logins, and this is preferable for most of the apps out there, but most likely you will still need to provide an option for the user to use a local account on your own site, and then all the considerations above will still apply. :(

For browser-based apps, the schema would be almost the same, except for replacing ‘Implement Client + Server hashing...’ with:

- Implement Client + Server hashing without client_slow_hash, i.e. with P'=P. Configure the server-side function to be ‘as slow as feasible’

  - As of now, scrypt is recommended on the server side; if following standards such as NIST is a requirement, PBKDF2 is recommended.

  - For the server side, use load parameters based on the maximum-allowable delay in the worst possible case (for example, in the case of a massive reconnect, if applicable) for the server-hardware-currently-in-use.

Note that when/if WebCrypto is widely adopted, browser-based apps should also move towards fully implemented Client + Server hashing as described for installable client-apps.

Acknowledgement

Cartoon by Sergey Gordeev from Gordeev Animation Graphics, Prague.

References

[Catena] Christian Forler, Stefan Lucks and Jakob Wenzel, ‘Catena: A Memory-Consuming Password Scrambler’, IACR Cryptology ePrint Archive, 2013

[Contini] Scott Contini, ‘Method to Protect Passwords in Databases for Web Applications’, Cryptology ePrint Archive: Report 2015/387, https://eprint.iacr.org/2015/387

[CppReference] http://en.cppreference.com/w/cpp/numeric/random/rand

[Crackstation] ‘Salted Password Hashing – Doing It Right’, https://crackstation.net/hashing-security.htm

[Fortuna] https://en.wikipedia.org/wiki/Fortuna_%28PRNG%29

[HashCat] ‘hashcat. advanced password recovery’, http://hashcat.net/oclhashcat/

[Loganberry04] David ‘Loganberry’ Buttery, ‘Frithaes! – an Introduction to Colloquial Lapine’, http://bitsnbobstones.watershipdown.org/lapine/overview.html

[NoBugs] ‘No Bugs’ Hare, ‘64 Network DO’s and DON’Ts for Multi-Player Game Developers. Part VIIa: Security (TLS/SSL)’, http://ithare.com/64-network-dos-and-donts-for-multi-player-game-developers-part-viia-security-tls-ssl/

[paj28] http://security.stackexchange.com/questions/58704/can-client-side-hashing-reduce-the-denial-of-service-risk-with-slow-hashes

[PHC] Password Hashing Competition, https://password-hashing.net/index.html

[SgtMaj] ‘Sergeant Major’ Hare, ‘Client-Plus-Server Password Hashing as a Potential Way to Improve Security Against Brute Force Attacks without Overloading the Server’, http://ithare.com/client-plus-server-password-hashing-as-a-potential-way-to-improve-security-against-brute-force-attacks-without-overloading-server/

[WebCrypto] http://www.w3.org/TR/WebCryptoAPI/

[WikiRainbow] ‘Rainbow tables’, Wikipedia, https://en.wikipedia.org/wiki/Rainbow_table

1. Strictly speaking, you need to double-check the documentation of your target distribution to be sure that /dev/urandom generates crypto-quality numbers (or uses [Fortuna]), which is common, but not guaranteed. However, I would argue that for the purposes of generating salt S such double-checking is not 100% required.

2. Strictly speaking, a matching attempted-P may represent a hash collision, if there is more than one attempted-P which corresponds to the (S,P') pair. However, for all intents and purposes an attempted-P found in this way will be indistinguishable from the real password P; most importantly, it can be used for impersonation. Also, after going through the full search space, the real P will be found too.

3. Rough calculation: 2^128 ≈ 3.4e38. Now let’s assume that there are a billion (1e9) cores on Earth, each able to calculate a billion hashes per second. It would mean that going through the whole 2^128 search space will take 3.4e38/1e9/1e9 = 3.4e20 seconds, or approx. 1e13 years. As the lifetime of the Sun is currently estimated at about 5e9 years, it means that the Sun will have enough time to die 2000 times before the search space is exhausted. And for 2^256, the situation becomes absolutely hopeless even if each and every atom of the Earth is converted to a core calculating a billion hashes per second.

4. While caching users’ credentials to avoid overload at this point is possible, it is quite difficult to implement such a feature without introducing major security holes, and therefore I do not recommend it in general.

5. A basic number-crunching crypto-algorithm, acting as a building block for higher-level protocols. Examples of crypto-primitives include AES, SHA256, and [Catena]. The problem with introducing a new crypto-primitive is that they’re usually quite difficult to implement properly, so for an application-level programmer it is usually better to wait until a crypto library does it for you.