How do you deal with memory for (Re)Actors? Sergey Ignatchenko proposes an allocation scheme.

Disclaimer: as usual, the opinions within this article are those of ‘No Bugs’ Hare, and do not necessarily coincide with the opinions of the translators and Overload editors; also, please keep in mind that translation difficulties from Lapine (like those described in [ Loganberry04 ]) might have prevented an exact translation. In addition, the translator and Overload expressly disclaim all responsibility from any action or inaction resulting from reading this article.

What is it about

As it says on the tin, this article is about allocators within the context of (Re)Actors (a.k.a. Reactors, Actors, ad hoc FSMs, Event-Driven Programs, and so on).

The main benefits we get from our C++ allocator (with (Re)Actors and proposed allocation model as a prerequisite), are the following:

- We have the option of having the best possible performance (same as that of good ol’ plain C/C++)

- Without changing app-level code, we have the option of tracking access to ‘dead’ objects via ‘dangling’ pointers (causing exception rather than memory corruption in the case of such access)

-

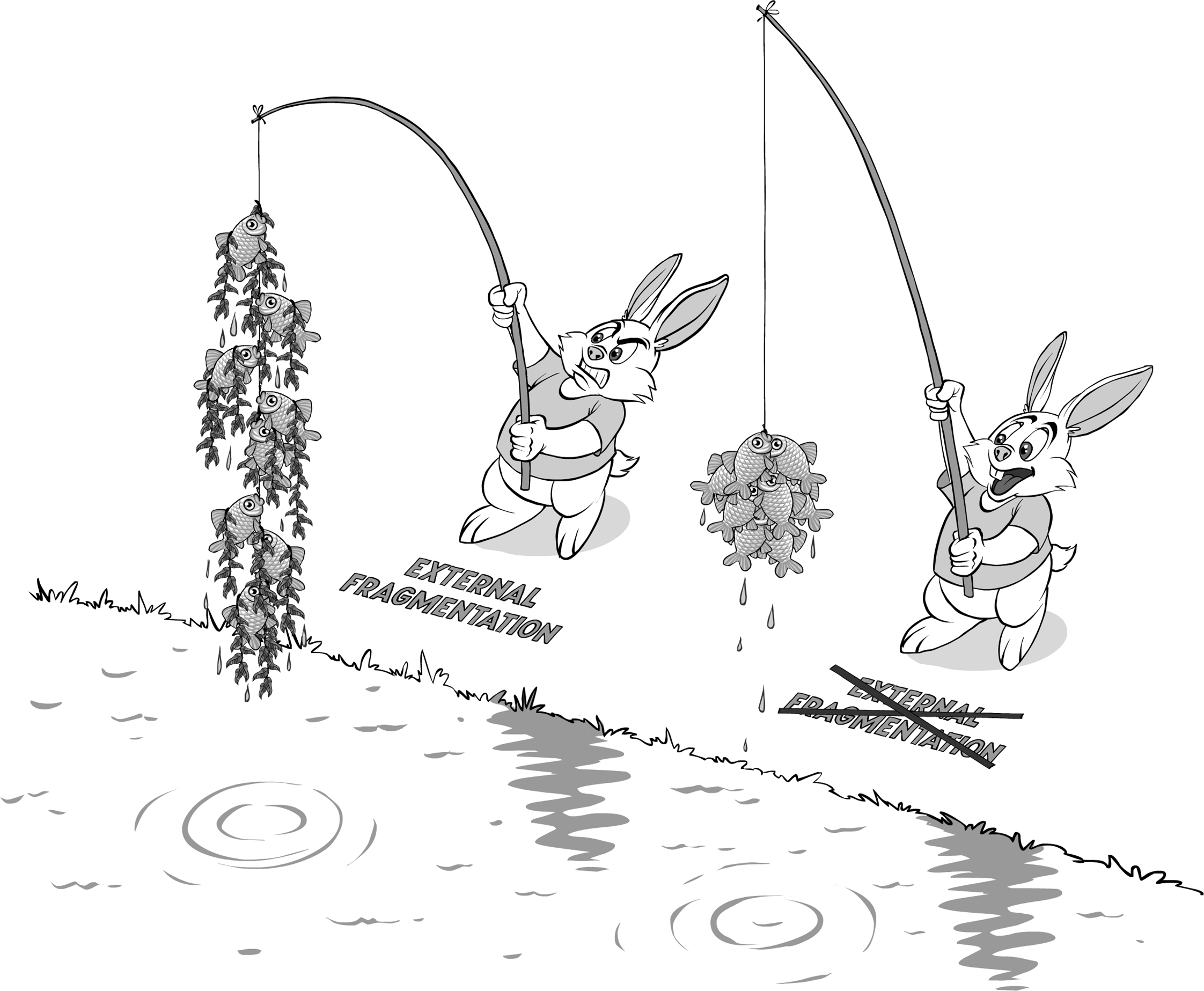

Again, without changing app-level code, we have the option of having a compactable heap. Very briefly, compacting the heap is often very important for long-running programs, as without relocation, programs are known to fall victim to so-called ‘external fragmentation’. Just one very common scenario: if we allocate a million 100-byte small objects, we will use around 25,000 4K CPU pages; then if we randomly delete 900,000 of our 100-byte objects, we’ll still have around 24,600 pages in use (unable to release them back to OS), just because it so happened that each of the remaining 24,600 pages has at least one non-deleted object. Such scenarios are quite common, and tend to cause quite a bit of trouble (in the example above, we’re wasting about 9x more memory than we really need,

plus

we have very poor spatial locality too, which is quite likely to waste cache space and to hurt performance).

- As a side note, many garbage-collected programming languages have been using compactable heaps for ages; I’ve seen this capability to compact used as an argument that garbage-collected languages are inherently better (and an argument against C++).
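The page counts in the fragmentation scenario can be sanity-checked with a few lines of arithmetic. The sketch below is only an illustration: it assumes 40 objects per 4K page (a round figure consistent with the 25,000 pages mentioned, not an exact one) and that deletions hit objects independently at random; the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Expected number of pages that still hold at least one live object when
// every object independently survives deletion with probability `survive`.
double expected_pages_in_use(double pages, int objects_per_page, double survive) {
    assert(survive >= 0.0 && survive <= 1.0);
    // A page can be released only if all of its objects were deleted.
    const double page_fully_empty = std::pow(1.0 - survive, objects_per_page);
    return pages * (1.0 - page_fully_empty);
}

// Pages needed if the surviving objects were packed densely (compacted).
double pages_after_compaction(double live_objects, int objects_per_page) {
    return std::ceil(live_objects / objects_per_page);
}
```

Calling `expected_pages_in_use(25000, 40, 0.1)` gives a figure a little above 24,600 pages, while `pages_after_compaction(100000, 40)` gives 2,500 pages, which is where the roughly tenfold gap comes from.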

Let’s note that while what we’ll be doing allows us to achieve benefits which are comparable to using traditional non-C++ mark-compact garbage collectors, we’re achieving those benefits in a significantly different manner. On the other hand, I don’t want to argue whether what we’re doing really qualifies as ‘automated garbage collection’, or if the name should be different. In the form described in this article, it is not even reference-counted garbage collection (though a similar approach can be applied to allocation models based on std::shared_ptr<> + std::weak_ptr<> – as long as we’re staying within (Re)Actors).

What is important though, is to:

- Significantly reduce chances for errors/mistakes while coding.

  - Within the proposed allocation model, there are no manual deletes, which should help quite a bit in this regard.

  - In addition, the handling of ‘dangling’ pointers is expected to help quite a bit too (at least while debugging, but in some cases also in production).

- Allow for best-possible performance when we need it, while allowing it to be a little bit reduced (but still good enough for most production code) if we happen to need to track some bugs (or to rely on the handling of ‘dangling’ pointers).

- Allow for a compactable heap (again, giving some performance hit compared to the best-possible performance – but the performance hit should usually be mild enough to run our compactable heap in production).

Message-passing is the way to go

Before starting to speak about memory allocation, we need to define what those (Re)Actors we’re about to rely on are about (and why they’re so important).

For a long while, I have been a strong proponent of message-passing mechanisms over mutex-based thread sync for concurrency purposes (starting from [ NoBugs10 ]). Fortunately, I am not alone with such a view; just as one example, the Go language’s concept of “ Do not communicate by sharing memory; instead, share memory by communicating ” [ Go2010 ] is pretty much the same thing.

However, only after returning from ACCU2017 – and listening to a brilliant talk [ Henney17 ] – I realized that we’re pretty much at the point of no return, and are about to reach a kinda-consensus that message-passing is THE way to implement concurrency at app-level (as opposed to traditional mutex-based thread sync).

The reasons for this choice are numerous – and range from “ mutexes and locks are there to prevent concurrency ” (as it was pointed out in [ Henney17 ]), to “ doing both thread sync and app-level logic at the same time tends to exceed cognitive limits of the human brain ” [ NoBugs15 ].

For the time being, it is not clear which of the message passing mechanisms will win (and whether one single mechanism will win at all) – but as I have had very good experiences with (Re)Actors (a.k.a. Actors, Reactors, ad hoc FSMs, and Event-Driven Programs), for the rest of this article I will concentrate on them.

Setting

To be a bit more specific, let’s describe what I understand as (Re)Actors.

Let’s use Generic Reactor as the common denominator for all our (Re)Actors. This Generic Reactor is just an abstract class, and has a pure virtual function react():

    class GenericReactor {
    public:
      virtual ~GenericReactor() = default; // destroyed via base pointer
      virtual void react(const Event& ev) = 0;
    };

Let’s name any piece of code which calls GenericReactor’s react() the ‘Infrastructure Code’. Quite often, this call is within the so-called ‘event loop’:

    std::unique_ptr<GenericReactor> r
        = reactorFactory.createReactor(...);
    while(true) { //event loop
      Event ev = get_event(); //from select(), libuv, ...
      r->react(ev);
    }

Let’s note that the get_event() function can obtain events from wherever we want – from select() (which is quite typical for servers) to libraries such as libuv (which is common for clients).

Also let’s note that an event loop, such as the one above, is by far not the only way to call react(): I’ve seen implementations of Infrastructure Code ranging from one running multiple (Re)Actors within the same thread, to another one which deserialized the (Re)Actor from a DB, then called react(), and then serialized the (Re)Actor back to the DB. What’s important, though, is that even if react() can be called from different threads – it MUST be called as if it is one single thread (=‘if necessary, all thread sync should be done OUTSIDE of our (Re)Actor, so react() doesn’t need to bother about thread sync regardless of the Infrastructure Code in use’).

Finally, let’s name any specific derivative from Generic Reactor (which actually implements our react() function), a Specific Reactor:

    class SpecificReactor : public GenericReactor {
    public:
      void react(const Event& ev) override;
    };
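To make the setting a bit more tangible, here is a minimal sketch of a Generic Reactor, one Specific Reactor, and a piece of Infrastructure Code driving it. Everything beyond `GenericReactor` and `react()` is an assumption made for illustration: the `Event` layout, the `CountingReactor` class, and `run_event_loop()` (which drains a queue rather than looping forever over select() or libuv, so that it terminates).

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <queue>
#include <string>

// Hypothetical event type; the article leaves Event unspecified.
struct Event {
    std::string payload;
};

class GenericReactor {
public:
    virtual ~GenericReactor() = default;
    virtual void react(const Event& ev) = 0;
};

// A Specific Reactor whose whole state is a counter and a string.
class CountingReactor : public GenericReactor {
public:
    void react(const Event& ev) override {
        ++events_seen_;
        last_payload_ = ev.payload;
    }
    int events_seen() const { return events_seen_; }
    const std::string& last_payload() const { return last_payload_; }

private:
    int events_seen_ = 0;
    std::string last_payload_;
};

// Infrastructure Code: the only place that calls react(), always from one
// logical thread. Returns the number of events dispatched.
std::size_t run_event_loop(const std::unique_ptr<GenericReactor>& r,
                           std::queue<Event> events) {
    assert(r && "Infrastructure Code needs a reactor to drive");
    std::size_t dispatched = 0;
    while (!events.empty()) {
        r->react(events.front());
        events.pop();
        ++dispatched;
    }
    return dispatched;
}
```

Note that `CountingReactor` contains no thread sync whatsoever; if the events came from several threads, serializing them would be the job of whatever fills the queue.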

Also, let’s observe that whenever a (Re)Actor needs to communicate with another (Re)Actor – adhering to the ‘Do not communicate by sharing memory; instead, share memory by communicating’ principle – it merely sends a message, and it is only this message which will be shared between (Re)Actors.

Trivial optimization: single-threaded allocator

Armed with (Re)Actors, we can easily think of a very simple optimization for our allocation techniques. As all the processing within (Re)Actors is single-threaded, we can easily say that:

- (Re)Actor allocators can be single-threaded (i.e. without any thread sync – and avoiding relatively expensive ‘compare-and-swap’ operations).

  - One exception to this is those messages which the (Re)Actor sends to the others – but classes implementing those messages can easily use a different (thread-synced) allocator.

- For the purposes of this article, we’ll say that each (Re)Actor will have its own private (and single-threaded) heap. While this approach can be generalized to per-thread heaps (which may be different from per-(Re)Actor heaps, in cases of multiple (Re)Actors per thread) we won’t do that here.

Ok, let’s write it down that our (Re)Actor allocator is single-threaded – and we’ll rely on this fact for the rest of this article (and everybody who has written a multi-threaded allocator will acknowledge that writing a single-threaded one is a big relief).
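As an illustration of how little is left once thread sync is out of the picture, below is a minimal sketch of a single-threaded fixed-size bucket with an intrusive free list. The class name `SingleThreadedBucket` and its interface are hypothetical, and the sketch never returns chunks to the OS; it is meant only to show that allocation and deallocation reduce to plain pointer loads and stores.

```cpp
#include <cassert>
#include <cstddef>
#include <new>
#include <vector>

// One bucket (one slot size) of a per-(Re)Actor heap. No mutexes, no atomics:
// correct only while a single logical thread uses it.
class SingleThreadedBucket {
public:
    explicit SingleThreadedBucket(std::size_t slot_size,
                                  std::size_t slots_per_chunk = 64)
        : slot_size_(round_up(slot_size)), slots_per_chunk_(slots_per_chunk) {
        assert(slots_per_chunk_ > 0);
    }
    SingleThreadedBucket(const SingleThreadedBucket&) = delete;
    SingleThreadedBucket& operator=(const SingleThreadedBucket&) = delete;
    ~SingleThreadedBucket() {
        for (void* chunk : chunks_) ::operator delete(chunk);
    }

    void* allocate() {
        if (free_list_ == nullptr) grow();
        FreeSlot* slot = free_list_;
        free_list_ = slot->next;  // plain load/store, no compare-and-swap
        ++live_;
        return slot;
    }

    void deallocate(void* p) {
        assert(p != nullptr);
        push_free(p);
        --live_;
    }

    std::size_t live() const { return live_; }

private:
    struct FreeSlot { FreeSlot* next; };

    // Slots must be able to hold a FreeSlot and stay maximally aligned.
    static std::size_t round_up(std::size_t n) {
        const std::size_t a = alignof(std::max_align_t);
        if (n < sizeof(FreeSlot)) n = sizeof(FreeSlot);
        return (n + a - 1) / a * a;
    }

    void push_free(void* p) { free_list_ = new (p) FreeSlot{free_list_}; }

    void grow() {
        chunks_.push_back(nullptr);  // reserve the bookkeeping entry first
        chunks_.back() = ::operator new(slot_size_ * slots_per_chunk_);
        char* base = static_cast<char*>(chunks_.back());
        for (std::size_t i = 0; i < slots_per_chunk_; ++i)
            push_free(base + i * slot_size_);
    }

    std::size_t slot_size_;
    std::size_t slots_per_chunk_;
    std::vector<void*> chunks_;
    FreeSlot* free_list_ = nullptr;
    std::size_t live_ = 0;
};
```

A real (Re)Actor heap would keep one such bucket per size class; messages sent to other (Re)Actors would go through a separate, thread-synced allocator.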

However, we’ll go MUCH further than this rather trivial observation.

Allocation model: owning refs, soft refs, naked refs

At this point, we need to note that in C++ (as mentioned, for example, in [ Sutter11 ]), it is impossible to provide compacted heaps “ without at least a new pointer type ”. Now, let’s see what can be done about it.

Let’s consider how we handle memory allocations within our (Re)Actor. Let’s say that within our (Re)Actor:

- We allow for three different types of references/pointers:

  - ‘owning’ references/pointers, which are conceptually similar to std::unique_ptr<>. In other words, if the ‘owning’ reference object goes out of scope, the object referenced by it is automatically destroyed. For the time being, we can say that ‘owning’ references are not reference-counted (and therefore copying them is prohibited, though moving is perfectly fine – just as with std::unique_ptr<>).

  - ‘soft’ pointers/references. These are quite similar to std::weak_ptr<> (though our ‘soft’ references are created from ‘owning’ references and not from std::shared_ptr<>), and to Java’s WeakReference/SoftReference. However, I don’t want to call them ‘weak references’ to avoid confusion with std::weak_ptr<> – which is pretty similar in concept, but works only in conjunction with std::shared_ptr<>, hence the name ‘soft references’.

    - Most importantly – trying to dereference (in C++, call an operator ->(), operator *(), or operator[]) our ‘soft’ reference when the ‘owning’ reference is already gone is an invalid operation (leading – depending on the mode of operation – to an exception or to UB; more on different modes of operation below).

  - ‘naked’ pointers/references. These are just our usual C/C++ pointers.

- Our (Re)Actor doesn’t use any non-const globals. Avoiding non-const globals is just good practice – and an especially good one in case of (Re)Actors (which are not supposed to interact beyond exchanging messages).

- Now, we’re saying that whatever forms the state of our (Re)Actor (in fact – it is all the members of our SpecificReactor) MUST NOT have any naked pointers or references (though both ‘owning’ and ‘soft’ references are perfectly fine). This is quite easy to ensure – and is extremely important for us to be able to provide some of the capabilities which we’ll discuss below.

- As for collections – we can easily say that they’re exempt from the rules above (i.e. we don’t care how collections are implemented – as long as they’re working). In addition, memory allocated by collections may be exempt from other requirements discussed below (we’ll note when it happens, in appropriate places).

With this memory allocation model in mind, I am very comfortable to say that

It is sufficient to represent ANY data structure, both theoretically and practically

The theoretical part can be demonstrated by establishing a way to represent an arbitrary graph with our allocation model. This can be achieved via two steps: (a) first, we can replace all the refs in an arbitrary graph by ‘soft’ refs, and (b) second, there is always a set of refs which make all the nodes in the graph reachable exactly once; by replacing exactly this second set of references with our ‘owning’ refs, we get the original arbitrary graph represented with our ‘owning refs’+‘soft refs’.

As for a practical part – IMO, it is quite telling that I’ve seen a very practical over-a-million-LOC codebase which worked exactly like this, and it worked like a charm too.
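The two-step construction from the theoretical argument can be shown on the smallest interesting graph, a cycle A → B → C → A. In the sketch below, the spanning edges A → B and B → C become ‘owning’ refs, while the remaining edge C → A stays ‘soft’. The aliases `owning_ptr` and `soft_ptr` are stand-ins chosen for this illustration (std::unique_ptr<> and a plain pointer respectively), and `Node` and `make_cycle()` are hypothetical names.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Stand-ins for the article's reference types (an assumption of this sketch).
template <class T> using owning_ptr = std::unique_ptr<T>;
template <class T> using soft_ptr = T*;

struct Node {
    explicit Node(std::string n) : name(std::move(n)) {}
    std::string name;
    std::vector<owning_ptr<Node>> owning_edges;  // edges of the spanning set
    std::vector<soft_ptr<Node>> soft_edges;      // all remaining edges
};

// Builds A -> B -> C -> A. Each node is reachable exactly once via owning
// edges (A owns B, B owns C); the edge closing the cycle is soft.
owning_ptr<Node> make_cycle() {
    owning_ptr<Node> a(new Node("A"));
    owning_ptr<Node> b(new Node("B"));
    owning_ptr<Node> c(new Node("C"));
    c->soft_edges.push_back(a.get());
    b->owning_edges.push_back(std::move(c));
    a->owning_edges.push_back(std::move(b));
    assert(a->owning_edges[0]->owning_edges[0]->soft_edges[0] == a.get());
    return a;
}
```

Destroying the returned root releases B and then C through the owning edges; the soft edge plays no part in ownership, so the cycle causes no leak.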

BTW, most of the findings in this article are also applicable to a more-traditional-for-C++11-folks allocation model of ‘shared ptr’+‘weak ptr’ (though for single-threaded access, so atomic requirements don’t apply; also, we’ll still need to avoid ‘naked’ pointers within the state of our (Re)Actor). However, it is a bit simpler to tell the story from the point of view of ‘owning’ refs +‘soft’ refs, so for the time being we’ll stick to the memory allocation model discussed above.

An all-important observation

Now, based on our memory allocation model, we’re able to make an all-important observation:

Observation 1. Whenever our program counter is within the Infrastructure Code but is outside of react(), there are no ‘naked pointers’ to (Re)Actor’s heap.

This observation directly follows from a prohibition on having ‘naked pointers’ within (Re)Actor’s state: when we’re outside of react(), there are no ‘naked pointers’ (pointing to the heap of our (Re)Actor) on the stack; and as there are no non-const globals, and there are no ‘naked pointers’ within the heap itself either – well, we’re fine.

Modes of operation

Now, let’s see how we can implement these ‘owning refs’ and ‘soft refs’. Actually, the beauty of our memory model is that it describes WHAT we’re doing, but doesn’t prescribe HOW it should be implemented. This leads us to several possible implementations (or ‘modes of operation’) for ‘owning refs’/‘soft refs’. Let’s consider some of these modes.

‘Fast’ mode

In ‘Fast’ mode, ‘owning refs/pointers’ are more or less std::unique_ptr<>s – and ‘soft refs/pointers’ are implemented as simple ‘naked pointers’.

With this ‘Fast’ mode, we get the best possible speed, but we don’t have any safety or relocation goodies. Still, it might be perfectly viable for some production deployments where speed is paramount (and crashes are already kinda-ruled out by thorough testing, by running new code in production in ‘kinda-Safe’ mode for a while, etc. etc.).

‘kinda-Safe’ mode

In a ‘kinda-Safe’ mode, we’ll be dealing with ‘dangling pointers’; the idea is to make sure that ‘dangling pointers’ (if there are any) don’t cause memory corruption but cause an exception instead.

First of all, let’s note though that because of the semantics of ‘owning pointers’, they cannot be ‘dangling’, so we need to handle only ‘soft’ and ‘naked’ pointers, and references.

‘Dangling’ soft references/pointers

To deal with ‘dangling’ soft-pointers/references, we could go the way of double-reference-counting (similar to the one done by std::weak_ptr<> – which actually uses the ages-old concept of tombstones), but we can do something better (and BTW, the same technique might be usable to implement std::weak_ptr<> too – though admittedly generalizing our technique to a multi-threaded environment is going to be non-trivial).

Our idea will be to:

- Say that our allocator is a ‘bucket allocator’ or ‘slab allocator’. What’s important is that if there is an object at memory address X, then there cannot be an object crossing memory address X, ever.

  - Let’s note that memory allocated by collections for their internal purposes is exempt from this requirement (!).

- Say that each allocated object has an ID – positioned right before the object itself. IDs are just incremented forever-and-ever for each new allocation (NB: 64-bit ID, being incremented 1e9 times per second, will last without wraparound for about 600 years – good enough for most of the apps out there if you ask me).

- Each of our ‘owning refs’ and ‘soft refs’, in addition to the pointer, contains an ID of the object it is supposed to point to.

- Whenever we need to access our ‘owning ref’ or ‘soft ref’ (i.e. we’re calling operator ->() or operator *() to convert from our ref to naked pointer), we’re reading the ID from our ref, AND reading the ID which is positioned right before the object itself – and comparing them. If there is a mismatch, we can easily raise an exception (as the only reason for such a mismatch is that the object has been deleted).

  - This approach has an inherent advantage over a tombstone-based one: as we do not need an extra indirection, this implementation is inherently more cache friendly. More specifically, we’re not risking an extra read from L3 cache or, Ritchie forbid, from main RAM, and the latter can take as much as 150 CPU cycles easily. On the other hand, for our ID-reading-and-comparing, we’ll be usually speaking only about the cost of 2–3 CPU cycles.

NB: of course, it IS still possible to use double-ref-counting/tombstones to implement ‘kinda-Safe mode’ – but at this time, I prefer an ID-based implementation as it doesn’t require an extra indirection (and such indirections, potentially costing as much as 150 cycles, can hurt performance pretty badly). OTOH, if it happens that for some of the real-world projects tombstones work better, it is always still possible to implement ‘kinda-Safe mode’ via a traditional tombstone-based approach.
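The ID-based check can be sketched in a few dozen lines. The code below is a toy under loud assumptions, not the implementation behind this article: `Slot`, `ToyHeap`, `owning_ptr` and `soft_ptr` are hypothetical names, the heap has a single bucket of 16 slots for one type, an ID of 0 is used to mark a dead slot, and the refs store a pointer to the slot (i.e. to the ID sitting right before the object) rather than to the object itself. It requires C++17 for std::launder.

```cpp
#include <cassert>
#include <cstdint>
#include <new>
#include <stdexcept>
#include <utility>

// One bucket slot: the ID sits right before the object's storage.
template <class T>
struct Slot {
    std::uint64_t id = 0;  // 0 means 'no live object in this slot'
    alignas(T) unsigned char storage[sizeof(T)];
    T* object() { return std::launder(reinterpret_cast<T*>(storage)); }
};

// Toy single-bucket heap. Slots are reused but never unmapped, so reading
// the ID of a dead slot is always a valid memory access.
template <class T>
class ToyHeap {
public:
    template <class... Args>
    Slot<T>* create(Args&&... args) {
        for (Slot<T>& s : slots_) {
            if (s.id == 0) {
                new (s.storage) T(std::forward<Args>(args)...);
                s.id = ++next_id_;  // IDs only ever grow
                return &s;
            }
        }
        throw std::bad_alloc();
    }
    void destroy(Slot<T>* s) {
        s->object()->~T();
        s->id = 0;
    }

private:
    Slot<T> slots_[16];
    std::uint64_t next_id_ = 0;
};

template <class T>
class soft_ptr {
public:
    explicit soft_ptr(Slot<T>* slot) : slot_(slot), id_(slot->id) {}
    T* operator->() const { return checked(); }
    T& operator*() const { return *checked(); }

private:
    T* checked() const {
        // The whole safety check: compare the ID in the ref with the ID in the heap.
        if (slot_->id != id_) throw std::runtime_error("access to deleted object");
        return slot_->object();
    }
    Slot<T>* slot_;
    std::uint64_t id_;
};

// Must not outlive the heap it was created from.
template <class T>
class owning_ptr {
public:
    template <class... Args>
    explicit owning_ptr(ToyHeap<T>& heap, Args&&... args)
        : heap_(&heap), slot_(heap.create(std::forward<Args>(args)...)) {}
    owning_ptr(owning_ptr&& o) noexcept : heap_(o.heap_), slot_(o.slot_) { o.slot_ = nullptr; }
    owning_ptr(const owning_ptr&) = delete;
    owning_ptr& operator=(const owning_ptr&) = delete;
    ~owning_ptr() { reset(); }

    void reset() {
        if (slot_) { heap_->destroy(slot_); slot_ = nullptr; }
    }
    T* operator->() const { return slot_->object(); }
    soft_ptr<T> soft() const {
        assert(slot_ != nullptr);
        return soft_ptr<T>(slot_);
    }

private:
    ToyHeap<T>* heap_;
    Slot<T>* slot_;
};
```

The point to observe is that a stale `soft_ptr` keeps throwing even after its slot has been reused for a new object, because the new object gets a new ID; no tombstone and no extra indirection are involved.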

‘Dangling’ naked references/pointers

With naked references/pointers – well, strictly speaking, we cannot provide strict guarantees on their safety (that’s why the mode is ‘kinda-Safe’, and not ‘really-Safe’). However, quite a few measures are still possible to both detect such accesses in debugging, and to mitigate the impact if it happens in production:

- Most importantly, our allocation model already has a restriction on life time of ‘naked’ pointers, which already significantly lowers the risks of ‘naked’ pointers dangling around.

- In addition, we can ensure that within our (Re)Actor allocator, we do NOT really free memory of deleted objects (leaving them in a kind of ‘zombie’ state) – that is, until we’re out of the react() function. This will further reduce risks of memory corruption due to a ‘dangling’ pointer (just because within our memory allocation model, all the dangling naked pointers will point to ‘zombie’ objects and nothing but ‘zombie’ objects). As for increased memory usage due to delayed reclaiming of the memory – in the vast majority of use cases, it won’t be a problem because of a typical react() being pretty short with relatively few temporaries.

  - In debug mode, we may additionally fill deleted objects with some garbage. In addition, when out of react(), we can detect that the garbage within such deleted objects is still intact; for example, if we filled our deleted objects with a repeating 0xDEAD pattern, we can check that after leaving react() deleted objects still have the 0xDEAD pattern – and raise hell if they don’t (messing with the contents of supposedly deleted objects would indicate severe problems within the last call to react()).

  - In production mode, we can say that our destructors leave our objects in a ‘kinda-safe’ state; in particular, ‘kinda-safe’ state may mean that further pointers (if any) are replaced with nullptrs (and BTW, within our memory allocation model, this may be achieved by enforcing that destructors of ‘owning pointers/refs’ and ‘soft pointers/refs’ are setting their respective pointers to nullptrs; implementing ‘kinda-safe’ state of collections is a different story, though, and will require additional efforts).

    - This can help to contain the damage if a ‘dangling’ pointer indeed tries to access such a ‘zombie’ object – at least we won’t be trying to access any further memory based on garbage within the ‘zombie’.
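The debug-mode ‘zombie’ handling can be sketched as a deferred-free list which poisons memory on deletion and verifies the poison once react() has returned. The class `ZombieList` and its methods are hypothetical, and the sketch assumes (purely for simplicity) that the memory came from std::malloc; a real (Re)Actor allocator would hand the slot back to its own bucket instead.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <vector>

class ZombieList {
public:
    ZombieList() = default;
    ZombieList(const ZombieList&) = delete;
    ZombieList& operator=(const ZombieList&) = delete;
    ~ZombieList() { release_all(); }

    // Called instead of a real free while react() is running: the memory
    // stays allocated and is filled with the 0xDE 0xAD pattern.
    void defer_free(void* p, std::size_t size) {
        assert(p != nullptr);
        zombies_.push_back(Zombie{static_cast<unsigned char*>(p), size});
        for (std::size_t i = 0; i < size; ++i) zombies_.back().bytes[i] = pattern(i);
    }

    // Called by Infrastructure Code after react() returns. Really frees the
    // zombies and reports how many of them were written to after deletion.
    std::size_t release_all() {
        std::size_t corrupted = 0;
        for (const Zombie& z : zombies_) {
            if (!intact(z)) ++corrupted;
            std::free(z.bytes);
        }
        zombies_.clear();
        return corrupted;
    }

    std::size_t pending() const { return zombies_.size(); }

private:
    struct Zombie {
        unsigned char* bytes;
        std::size_t size;
    };

    static unsigned char pattern(std::size_t i) { return (i % 2 == 0) ? 0xDE : 0xAD; }

    static bool intact(const Zombie& z) {
        for (std::size_t i = 0; i < z.size; ++i)
            if (z.bytes[i] != pattern(i)) return false;
        return true;
    }

    std::vector<Zombie> zombies_;
};
```

A non-zero result from `release_all()` is the cue to ‘raise hell’: something within the last react() wrote through a dangling pointer. Reads through dangling pointers are not detected by this check; they merely see the poison pattern.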

‘Safe with relocation’ mode

In a ‘Safe with relocation’ mode, in addition to dealing with ‘dangling’ soft refs, we’ll allow our allocated objects to be relocated. This will allow us to eliminate the dreaded ‘external fragmentation’, which tends to cause quite a bit of trouble for long-running systems: lots of CPU pages stay allocated while holding just a single live object each (which in turn, if we cannot possibly relocate those single objects, tends to cause lots of memory waste).

To implement relocation, in addition to the trickery discussed for ‘kinda-Safe’ mode, we’ll be doing the following:

- All relocations will happen only outside of the react() function (i.e. when there are no ‘naked’ pointers to the heap, phew).

  - How exactly to relocate objects to ensure freeing pages is outside the scope of this article; here, we are concentrating only on the question of how to ensure that everything works after we’re done relocating some of our objects.

- Keep a per-(Re)Actor-heap ‘relocation map’ – a separate map of object IDs (the ones used to identify objects, as discussed in ‘kinda-Safe’ mode) into new addresses.

  - To keep the size of the ‘relocation map’ from growing forever-and-ever, we could:

    - For each of our heap objects, keep a counter of all the ‘owning’ and ‘soft’ pointers to the object.

    - Whenever we relocate an object, copy this counter to the ‘relocation map’. Here, it will have the semantics of ‘remaining pointers to be fixed’.

    - Whenever we update our ‘owning’ or ‘soft’ pointer as described below, decrement the ‘remaining pointers to be fixed’ counter (and when it becomes zero, we can safely remove the entry from our ‘relocation map’).

  - An alternative (or complementing) approach is to rely on ‘traversing’, as described below.

  - Exact implementation details of the ‘relocation map’ don’t really matter much; as it is accessed only very infrequently, search times within it are not important (though I am not saying we should use linear search there).

- Whenever we detect access to a non-matching object ID (i.e. an ‘owning pointer’ or ‘soft pointer’ tries to convert to a ‘naked’ pointer and finds out that the object ID in heap is different from the ID they have stored), instead of raising an exception right away, we’ll look into the ‘relocation map’ using the object ID within the pointer trying to access the object, and then:

  - If the object with such an object ID is found in the ‘relocation map’, we update our ‘owning pointer’ or ‘soft pointer’ to a new value and continue.

  - If the object with the ID within the pointer is not found, the object has been deleted, so we raise an exception to indicate an access attempt to a deleted object (just as for ‘kinda-Safe’ mode above).

- If our relocation has led to a page being freed (and decommitted), attempts to dereference ‘owning pointers’ or ‘soft pointers’ may cause a CPU access violation. In such cases, we should catch the CPU exception, and once again look into our ‘relocation map’ using exactly the same logic as above (and causing either updating the current pointer, or an app-level exception).

  - To make sure that our system works as intended (and that all the pointers can still rely on an object ID always being before the object), we need to take the following steps:

    - After decommitting the page, we still need to keep address space for it reserved.

    - In addition, we need to keep track of such decommitted-but-reserved pages in some kind of ‘page map’, and make sure that if we reuse the same page, we use it only for allocations of exactly the same ‘bucket size’ as before.

    - While this might sound restrictive, for practical x64 systems it is usually not a big deal because (as we’re decommitting the page) we’ll be wasting only address space, and not actual memory. As modern x64 OSs tend to provide processes with 47-bit address space, this means that for a program which uses not more than 100G of RAM at any given time, and uses 100 different bucket sizes, in the very worst case, we’ll waste at most 10000G of address space, and this is still well below that 47-bit address space we normally have.

Bingo! We’ve got (kinda-)safe implementation – and with the ability to compact our heap too, if we wish.
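The bookkeeping around the ‘relocation map’ can be sketched as follows. The names `RelocationMap`, `on_relocated()` and `fix_up()` are hypothetical, and the sketch deliberately leaves out quite a few real-world details (an object relocated twice, a relocated object being deleted later, refs destroyed before they were ever fixed, and the handling of CPU access violations); it shows only the lookup and the ‘remaining pointers to be fixed’ counter.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <unordered_map>

struct RelocationEntry {
    void* new_address;
    std::uint32_t remaining_pointers;  // refs which still hold the old address
};

class RelocationMap {
public:
    // Called by the compactor (outside of react()) after moving an object.
    // pointer_count is the per-object counter of 'owning' and 'soft' refs.
    void on_relocated(std::uint64_t id, void* new_address, std::uint32_t pointer_count) {
        if (pointer_count > 0) map_[id] = {new_address, pointer_count};
    }

    // Called by an 'owning' or 'soft' ref whose stored ID did not match the
    // ID found in the heap. Returns the new address, or throws if the object
    // was not relocated (which means it has been deleted).
    void* fix_up(std::uint64_t id) {
        auto it = map_.find(id);
        if (it == map_.end()) throw std::runtime_error("access to deleted object");
        assert(it->second.remaining_pointers > 0);
        void* addr = it->second.new_address;
        // One more ref has been fixed; drop the entry with the last one.
        if (--it->second.remaining_pointers == 0) map_.erase(it);
        return addr;
    }

    std::size_t size() const { return map_.size(); }

private:
    std::unordered_map<std::uint64_t, RelocationEntry> map_;
};
```

After `fix_up()` returns, the ref would store the new address and re-run the usual ID comparison at that address before handing out a ‘naked’ pointer.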

Traversing SpecificReactor state

In spite of all our efforts discussed above, in certain cases, there might be situations when the size of our ‘page map’ and especially ‘relocation map’ will grow too large. While I expect such situations to be extremely rare, it is still nice to know that there is a way to handle them.

If we say that for every object within our class SpecificReactor, there is a traverse() function (with traverse() at each level doing nothing but calling traverse() for each of the child objects) then after calling traverse() for the whole SpecificReactor, we can be sure that all the pointers have been dereferenced, and therefore were fixed if applicable; as a result – after such a traverse() – our ‘relocation map’ is no longer necessary and can be cleaned (BTW, if we’re doing traverse() frequently enough, we may avoid storing the reference count, which was mentioned above in the context of cleaning up the ‘relocation map’).

Moreover, after such a call to SpecificReactor::traverse(), we can be sure that there are no more pointers to decommitted pages, which means that ‘page map’ can be cleaned too.
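Structurally, such a traversal is very simple. In the sketch below, `Ref` is a stand-in for an ‘owning’ or ‘soft’ ref, and its `touch()` plays the role of the dereference which triggers the ID check and the ‘relocation map’ fix-up; `Item`, `Inventory` and the member names are made up for illustration, as the real state of a SpecificReactor is app-specific.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Stand-in for an 'owning'/'soft' ref. needs_fix models 'the stored ID no
// longer matches the heap because the object has been relocated'.
struct Ref {
    bool needs_fix = false;
    void touch(std::size_t& fixed) {
        if (needs_fix) {
            needs_fix = false;  // here a real ref would consult the relocation map
            ++fixed;
        }
    }
};

struct Item {
    Ref owner;
    void traverse(std::size_t& fixed) { owner.touch(fixed); }
};

struct Inventory {
    std::vector<Item> items;
    Ref selected;
    // traverse() at each level only touches its own refs and recurses.
    void traverse(std::size_t& fixed) {
        selected.touch(fixed);
        for (Item& item : items) item.traverse(fixed);
    }
};

class SpecificReactor {
public:
    Inventory inventory;
    Ref current_target;

    // Called by Infrastructure Code outside of react(). Returns how many
    // refs were fixed; afterwards the relocation map can be dropped.
    std::size_t traverse() {
        std::size_t fixed = 0;
        current_target.touch(fixed);
        inventory.traverse(fixed);
        assert(!current_target.needs_fix);
        return fixed;
    }
};
```

Forgetting to touch a single member in some `traverse()` would silently break the guarantee, so in practice it is worth generating these functions or checking them in debug builds.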

On the one hand, let’s note that for (Re)Actors with a large state, traversing the whole state may take a while (especially if the state is large enough to spill out of the CPU caches) – which may be undesirable for latency-critical apps. On the other hand, in such cases it is usually possible to implement traversing in an incremental manner (relying on the observation that any newly created objects are not a problem) – but all methods I know for such incremental traversals require us to be very careful about object moves (from a not-traversed-yet into a supposedly-already-traversed area) and about invalidating collection iterators. Still, it is usually possible and fairly easy to write such an incremental traversal – albeit an ad hoc one (i.e. taking the specifics of the app into account).

Further discussion planned

Actually, this is not the end of discussion about (Re)Actors and their allocators. In particular, I hope to discuss how to use such allocators to implement (Re)Actor serialization (and as mentioned in [ NoBugs17 ], serialization of the (Re)Actor state is necessary to achieve quite a few (Re)Actor goodies, including such big things as Replay-Based Regression Testing and production post-factum debugging).

Cartoon by Sergey Gordeev from Gordeev Animation Graphics, Prague

References

[Go2010] ‘Share Memory By Communicating’, The Go Blog, https://blog.golang.org/share-memory-by-communicating

[Henney17] Kevlin Henney, ACCU2017, ‘Thinking Outside the Synchronisation Quadrant’

[Loganberry04] David ‘Loganberry’, Frithaes! – an Introduction to Colloquial Lapine!, http://bitsnbobstones.watershipdown.org/lapine/overview.html

[NoBugs10] ‘No Bugs’ Hare, ‘Single Threading: Back to the Future?’, Overload #97–98, June–Aug 2010

[NoBugs15] ‘No Bugs’ Hare, ‘Multi-threading at Business-logic Level is Considered Harmful’, Overload #128, Aug 2015

[NoBugs17] ‘No Bugs’ Hare, ‘Deterministic Components for Interactive Distributed Systems’, ACCU2017, http://ithare.com/deterministic-components-for-interactive-distributed-systems-with-transcript/

[Sutter11] Herb Sutter, ‘Garbage Collection Synopsis, and C++’, https://herbsutter.com/2011/10/25/garbage-collection-synopsis-and-c/