Design issues cause problems. Charles Tolman considers an organising principle to get to the heart of the matter.

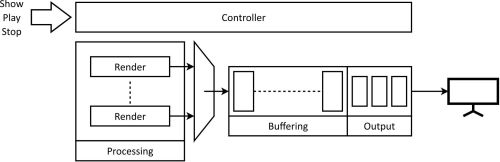

I want to underpin the philosophical aspect of this discussion by using an example software architecture and considering some design problems that I have experienced with multi-threaded video player pipelines. The issues I highlight could apply to many video player designs.

Figure 1 is a highly simplified top-level schematic, the original being just an A4 PDF captured from a whiteboard, a tool that I find much better for working on designs than any computer-based UML drawing tool. The gross motor movement of hand drawing ‘in the large’ seems to help the thinking process.

Figure 1

There are three basic commands for controlling any video player that has random access along a video timeline:

- Show a frame

- Play

- Stop

In this example, there is a main controller thread that handles the commands and controls the whole pipeline. I am going to conveniently ignore the hard problem of actually reading anything off a disk fast enough to keep a high-resolution, high-frame-rate player fed with data.

The first operation for the pipeline to do is to render the display frames in a parallel manner. The results of these parallel operations, since they will likely be produced out of order, need to be made into an ordered image stream that can then be buffered ahead to cope with any operating system latencies. The buffered images are transferred into an output video card, which has only a relatively small amount of video frame storage. This of course needs to be modeled in the software so that (a) you know when the card is full; and (b) you know when to switch the right frame to the output without producing nasty image tearing artefacts.
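As a minimal sketch of the reordering step described above, the following shows one way to turn out-of-order render results into an ordered stream. The class and method names are hypothetical and Python is used purely for illustration; it assumes frames are identified by consecutive integers.

```python
class FrameReorderer:
    """Turns out-of-order render results into an ordered frame stream."""

    def __init__(self, first_frame=0):
        self._next = first_frame  # next frame number the stream may emit
        self._pending = {}        # frames that arrived early, keyed by number

    def push(self, frame_number, image):
        """Accept one rendered frame; return the frames now ready, in order."""
        self._pending[frame_number] = image
        ready = []
        # Emit as long as the next expected frame is available.
        while self._next in self._pending:
            ready.append((self._next, self._pending.pop(self._next)))
            self._next += 1
        return ready
```

A frame that arrives early is simply held until every earlier frame has been emitted, so the buffer downstream only ever sees frames in timeline order.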

These are all standard elements you will get with many video player designs, but I want to highlight three design issues that I experienced in order to get an understanding of what I will later term an ‘Organising Principle’.

First there was slow operation resulting in non real-time playout. Second, occasionally you would get hanging playout or stuttering frames. Third, you could very occasionally get frame jitter on stopping.

Slow operation

Given what I said about Goethe and his concept of Delicate Empiricism, the very first thing to do was to reproduce the problem and collect data, i.e. measure the phenomenon WITHOUT jumping to conclusions. In this case it required the development of logging instrumentation software within the system – implemented in a way that did not disturb the real-time operation.
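One possible shape for such low-disturbance instrumentation is sketched below. This is an assumption for illustration, not the instrumentation that was actually built: events are recorded into a fixed-size in-memory buffer with no I/O or formatting on the hot path, and are only formatted once playout has stopped. All names are hypothetical.

```python
import time
from collections import deque


class EventTrace:
    """Fixed-size in-memory trace; the oldest entries are overwritten."""

    def __init__(self, capacity=4096):
        self._events = deque(maxlen=capacity)

    def record(self, tag, frame_number):
        # Hot path: no I/O and no string formatting, just a timestamp
        # and two small values appended to the buffer.
        self._events.append((time.perf_counter_ns(), tag, frame_number))

    def dump(self):
        """Format the trace; intended to be called after playout has stopped."""
        return [f"{t} {tag} {frame}" for t, tag, frame in list(self._events)]
```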

With this problem I initially found that the image processing threads were taking too long, though the processing itself completed in time once the threads had their data. So the slowdown was occurring before they could start their processing.

The processing relied on fairly large processing control structures that were built from some controlling metadata. This build process could take some time so these structures were cached with their access keyed by that metadata, which was a much smaller structure. Accessing this cache would occasionally take a long time and would give slow operation, seemingly of the image processing threads. This cache had only one mutex in its original design and this mutex was locked both for accessing the cache key and for building the data structure item. Thus when thread A was reading the cache to get at an already built data item, it would occasionally block behind thread B which was building a new data item. The single mutex was getting locked for too long while thread B built the new item and put it into the cache.

So now I knew exactly where the problem was. Notice the difference between the original assumption, that the problem lay in the image processing, and the actual cause, which was the cache access.

It would have been all too easy to jump to an erroneous conclusion, especially prevalent in the Journeyman phase, and change what was thought to be the problem. Although such a change would not actually fix the real issue, it could have changed the behaviour and timing so that the problem may not present itself, thus looking like it was fixed. It would likely resurface months later – a costly and damaging process for any business.

In this case the solution was to have finer-grained mutexes: one for the key access into the cache and a separate one for accessing the data item. On first access the data item would be lazily built, and thus needed the second mutex to protect the write (build) before any read access.
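A minimal sketch of this two-level locking follows, under the assumption that each cached item carries its own lock. The names `ControlStructureCache` and `build` are hypothetical, and Python stands in for whatever language the player was written in.

```python
import threading


class _Entry:
    def __init__(self):
        self.lock = threading.Lock()  # guards the build of this item only
        self.value = None
        self.built = False


class ControlStructureCache:
    def __init__(self, build):
        self._build = build                  # hypothetical slow builder: key -> structure
        self._index_lock = threading.Lock()  # guards the key lookup only
        self._entries = {}

    def get(self, metadata_key):
        # Short critical section: find or create the slot for this key.
        with self._index_lock:
            entry = self._entries.get(metadata_key)
            if entry is None:
                entry = self._entries[metadata_key] = _Entry()
        # Potentially long critical section, but per item: only threads
        # wanting this same item wait while it is lazily built.
        with entry.lock:
            if not entry.built:
                entry.value = self._build(metadata_key)
                entry.built = True
            return entry.value
```

With this arrangement a thread reading an already built item holds the index lock only for the lookup, so it no longer queues behind another thread that is building a different item.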

Hanging playout or stuttering frames

The second bug was that the playout would either hang or stutter. This is a good example because it illustrates a principle that we need to learn when dealing with any streamed playout system.

The measurement technique in this case was extremely ‘old school’: simply printing data to a log output file. Of course only a few characters were output per frame, because at 60fps (a typical modern frame-rate) you have only about 16.7ms per frame.

In this case the streaming at the output end of the pipeline was happening out of order, a bad fault for a video playout design. Depending upon how the implementation was done, it would either cause the whole player to hang or produce a stuttered playout. Finding the cause of this took a lot of analysis of the output logs and many changes to what was being logged. This was an example of needing to be clear about the limits of one’s knowledge and of properly identifying the data that next needed to be collected.

I found that there was an extra ‘hidden’ thread added within the output card handling layer in order to pass off some other output processing that was required. However it turned out that there was no enforcement of frame streaming order. This meant that the (relatively) small amount of memory in the output card would get fully allocated and this would give rise to a gap in the output frame ordering. The output control stage was unable to fill the gap in the frame sequence with the correct frame, because there was no room in the output card for that frame. This would usually result in the playout hanging.

This is why with a streaming pipeline, where you always have limited resources at some level, allocation of those resources must be done in streaming order. This is a dynamic principle that can take a lot of hard won experience to learn.
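The principle can be sketched as a small model of the output card's frame slots, in which a slot is only ever granted to the next frame in stream order. This is an illustrative assumption rather than the actual fix, and the names are hypothetical.

```python
class OutputCardSlots:
    """Models a card with a small, fixed number of frame slots."""

    def __init__(self, slot_count, first_frame=0):
        self._free = slot_count
        self._next_to_allocate = first_frame

    def try_allocate(self, frame_number):
        """Grant a slot only to the next frame in stream order."""
        if frame_number != self._next_to_allocate or self._free == 0:
            return False  # caller keeps the frame and retries later
        self._free -= 1
        self._next_to_allocate += 1
        return True

    def release(self):
        """Called once a frame has been displayed and its slot is reusable."""
        self._free += 1
```

Because later frames cannot claim slots ahead of earlier ones, the card can never fill up while a gap remains in the frame sequence.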

The usual Journeyman approach to such a problem is just to add more memory, i.e. more resource! This will hide the problem because though processing is still done out of order, the spare capacity has been increased and it will not go wrong until you next modify the system to use more resource. At this point the following statement is usually made:

But this has been working ok for years!

The instructions I give to less experienced programmers when they are trying to debug such problems will usually include the following:

- Do NOT change any of the existing functionality.

- Disturb the system as little as possible.

- Keep the bug reproducible so you can measure what is happening.

Then you will truly know when you have fixed the fault.

Frame jitter on stop

The third fault case was an issue of frame jitter when stopping playout. The problem was that although the various buffers would get cleared, there could still be some frames ‘in flight’ in the handover threads. This is a classic multi-threading problem and one that needs careful thought.

In this case when it came time to show the frame at the current position, an existing playout had to be stopped and the correct frame would need to be processed for output. This correct frame for the current position would make its way through to the end of the pipeline, but could get queued behind a remnant frame from the original stopped playout. This remnant frame would most likely have been ahead of the stop position because of the pre-buffering that needed to take place. Then when it came time to re-enable the output frame viewing in order to show the correct frame, both frames would get displayed, with the playout remnant one being shown first. This manifested on the output as a frame jitter.

One likely fix from an inexperienced programmer would be to make the system sit around waiting for seconds while the buffers were cleared and possibly cleared again, just in case! (The truly awful ‘sleep’ fix.) This is one of those cases where, again due to lack of deep analysis, a defensive programming strategy is used to try to force a fix of what initially seems to be the problem. Again, it is quite likely that this would seem to fix the problem, and it will probably happen if the developer is under heavy time pressure. But it would be an example where the best practice of taking time to properly understand the failure mode in a Delicately Empirical way is compromised by a rush to a solution.

The final solution to this particular problem was to use the concept of uniquely identified commands, i.e. ‘command ids’. Thus each command from the controlling thread, whether it was a play request or a show frame request, would get a unique id. This id was then tagged on to each frame as it was passed through the pipeline. By using a low-level globally accessible (within the player) ‘valid command id set’ the various parts of the pipeline could decide, by looking at the tagged command id, if they had a valid frame that could be allowed through or quietly ignored.

When stopping the playout, all that had to be done was to clear the buffers and remove the relevant id from the ‘valid command id set’. Any pesky remaining ‘in flight’ frames would then be ignored, since they carried an invalid command id. This changed the stop behaviour from an occasional yet persistent bug into a completely reliable operation, without the need to use ‘sleep’ calls anywhere.
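A minimal sketch of the ‘valid command id set’ idea is given below. The class and function names are hypothetical, and it assumes each queued frame is tagged as a `(command_id, frame)` pair.

```python
import itertools
import threading


class ValidCommandIds:
    """Shared set of command ids whose frames may still be shown."""

    def __init__(self):
        self._lock = threading.Lock()
        self._valid = set()
        self._counter = itertools.count(1)

    def issue(self):
        """Create a unique id for a new play or show-frame command."""
        with self._lock:
            command_id = next(self._counter)
            self._valid.add(command_id)
            return command_id

    def revoke(self, command_id):
        """Invalidate a command, e.g. when its playout is stopped."""
        with self._lock:
            self._valid.discard(command_id)

    def is_valid(self, command_id):
        with self._lock:
            return command_id in self._valid


def frames_to_display(queued_frames, valid_ids):
    """Output stage: drop any frame whose command is no longer valid."""
    return [frame for command_id, frame in queued_frames
            if valid_ids.is_valid(command_id)]
```

For example, if a play command is revoked while one of its frames is still queued, and a show-frame command is then issued, only the show-frame command's frame survives the filter:

```python
ids = ValidCommandIds()
play = ids.issue()
queued = [(play, "frame 120")]   # remnant from the stopped playout
ids.revoke(play)
show = ids.issue()
queued.append((show, "frame 100"))
remaining = frames_to_display(queued, ids)  # only "frame 100" is kept
```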

Hidden organising principles

In conclusion the above issues dealt with the following design ideas:

- Separating Mutex Concerns.

- Sequential Resource Allocation.

- Global Command Identification.

But I want to characterize these differently because the names sound a little like pattern titles. Although as a software community we have had success using the idea of patterns I think the concept has become rather more fixed than Christopher Alexander may have intended. Thus I will rename the solutions as follows in order to expressly highlight their dynamic behavioural aspect:

- Access Separation.

- Sequential Allocation.

- Operation Filtering.

You might have noticed that, in the third example, the original concept of ‘Global Command Identification’ represents just one possible way to implement the dynamic issue of filtering operations. This is something it has in common with much of the published design pattern work, where specific example solutions are mentioned. To me design patterns represent a more fixed idea that is closer to the actual implementation.

But there is a difference between the architecture of buildings – where design patterns originated – and the architecture of software. Although both deal with the design of fixed constructs, whether it be a building or code, the programmer has to worry far more about the dynamic behaviour of the fixed construct (their code). Yes – a building architect does have to worry about the dynamic behaviour of people inhabiting their design, but software is an innately active artefact.

Though others may come up with a better naming for the behavioural aspects of the ideas, I am trying to use a more mobile and dynamic definition of the solutions. Looking at the issues in this light starts to get to the core of why it is so hard to develop an architectural awareness. We have to deal with a far more, in philosophical terms, ‘phenomenological’ set of thoughts. This is why the historical philosophical context can enlighten the problematic aspects of our programming careers.

We need to move our thinking forward and understand the ‘Organising Principles’ that live behind the final design solutions, i.e. how the active processes need to run. Being able to perceive and ‘livingly think’ these mobile thought structures is what we need to do as we make our way to becoming accomplished programmers.

A truly understood concept of the mobile Organising Principle does not really represent design patterns – not in the way we have them at the moment. It is NOT a static thing. It cannot be written down on a piece of paper. Although it informs any software implementation it cannot be put into the code. If you fix it: You Haven’t Got It. Remember that phrase because truly getting and understanding it is the real challenge. The Organising Principle lives behind the parts of any concrete implementation.

Despite the slippery nature of the Organising Principle, I shall attempt to explore this more in a subsequent article and give some ideas about what we can do as programmers to improve such mobile perception faculties.